
People living next to OpenAI data centers reportedly lack clean water, training Llama 3.1 produced CO2 emissions roughly equal to 133 car lifetimes, and unemployment among recent grads could hit 25%. News like this makes us wonder whether using AI is justified. And, spoiler: not every use is.

As with any technology, once we start adding it everywhere, we need to pause, reflect, and consider the consequences it may have.

So, this blog post invites you to do just that: to learn what ethical dilemmas AI raises, how we can minimize its harm to our environment and society, and how to be on the right side of history with your AI implementation.

Simply put, ethical AI is the use of artificial intelligence that harms neither humans nor the planet. In more detail, AI ethics has many aspects, which in one way or another highlight the potential negative influence of this technology on the world, as well as the practicality and purpose of its use. Main principles include:

These are the foundation of UNESCO's AI ethics, and they are considered the gold standard for anyone who wants to use or implement artificial intelligence ethically.

Another variation of ethical AI principles is described by Harvard University, which distinguishes five of them: fairness, transparency, accountability, privacy, and security.

A few years ago, when AI was still a sort of laboratory miracle rather than an everyday necessity for nearly everyone, ethical AI was mostly about data privacy and how industries such as healthcare could implement AI without compromising anyone's personal information.

Nowadays, with AI more accessible and hardly a company left without an “AI” stage in its business strategy, the world has come to realize that there are more and more ethical questions around it.

The first and oldest concern is that AI can produce biased results that may harm society. For example, if a company uses AI to review applications for a manager's position, the technology may prioritize male candidates, because historical inequality and prejudice against female managers are baked into the data, which might then suggest that men are the better option.
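One simple way to spot this kind of bias is to compare how often each group gets shortlisted. The sketch below is a minimal illustration, not a full fairness audit: the `decisions` records are made-up data, the function names are ours, and the 0.8 threshold is the “four-fifths” rule of thumb, used here only as an assumed warning level.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of shortlisted candidates per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, shortlisted in decisions:
        totals[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Lowest selection rate divided by the highest one (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest

# Hypothetical screening results: (group, was the candidate shortlisted?)
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold, see the note above
print(rates, ratio, flagged)
```

A low ratio does not prove discrimination on its own, but it is a signal that the model and its training data deserve a closer look.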

Generative AI may hallucinate and create inaccurate content. This may lead to disinformation, flawed decision-making, and, eventually, harmful consequences for people using the tool, and the business providing it.

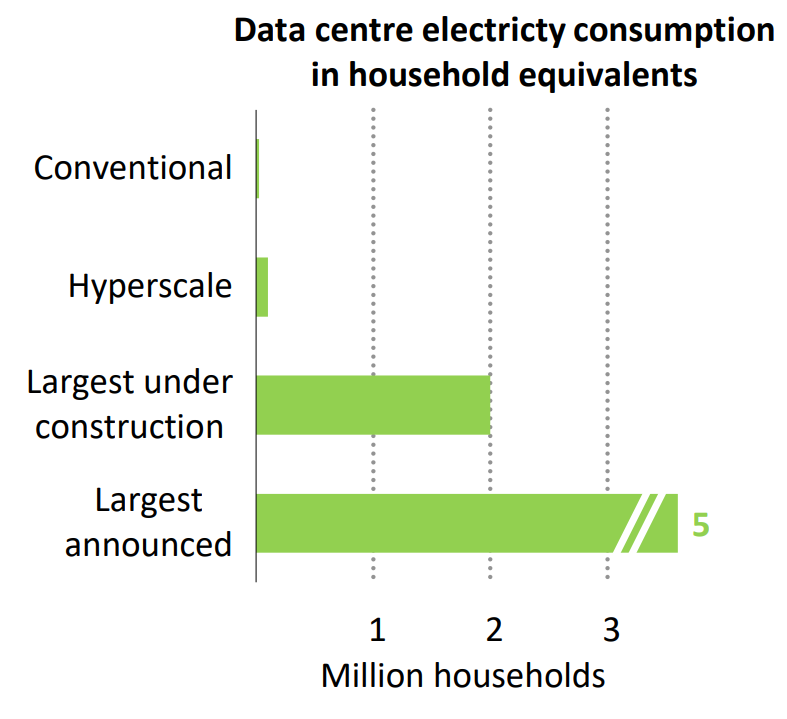

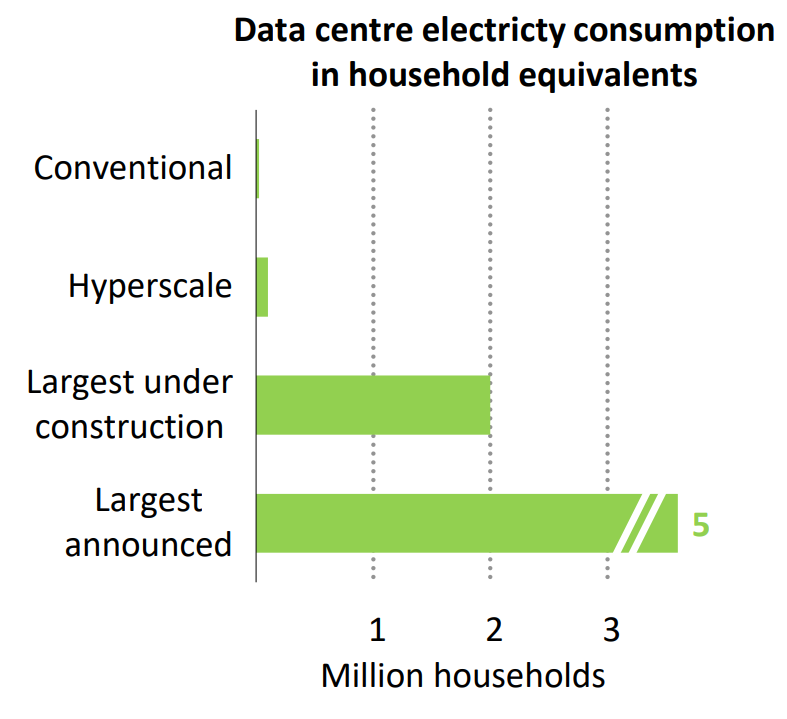

Recent research shows that generative AI requires a lot of electricity and water, and produces massive amounts of CO2 emissions.

For example, a report by the International Energy Agency explains that “a 100 MW data centre can consume as much electricity as 100 000 households”.
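To get a feel for that comparison, here is a back-of-the-envelope check. It is only a sketch under our own assumption that the data centre draws its full 100 MW around the clock; the two figures from the quote are the only numbers taken from the report.

```python
# Figures from the IEA quote above
DATA_CENTRE_POWER_MW = 100
HOUSEHOLDS = 100_000

# Assumption (ours, not the report's): the facility runs at full load all year
HOURS_PER_YEAR = 24 * 365

annual_energy_mwh = DATA_CENTRE_POWER_MW * HOURS_PER_YEAR
per_household_kwh = annual_energy_mwh * 1_000 / HOUSEHOLDS
average_household_kw = DATA_CENTRE_POWER_MW * 1_000 / HOUSEHOLDS

print(f"Data centre: {annual_energy_mwh:,} MWh per year")
print(f"Per household: {per_household_kwh:,.0f} kWh per year "
      f"({average_household_kw:.1f} kW on average)")
```

Under that assumption, the comparison works out to an average draw of about 1 kW per household.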

The IMD report, compiled entirely from the annual reports of the big companies powering AI, details the enormous water and power consumption of Google, Microsoft, and Meta.

The news report we mentioned in the introduction, about people living next to OpenAI data centers having no access to clean water, raises concerns about humans coexisting with AI infrastructure.

This is also backed by data from the “Energy and AI” report, which states that data centers, which AI requires in large numbers, are located closer to urban areas than any other type of facility.

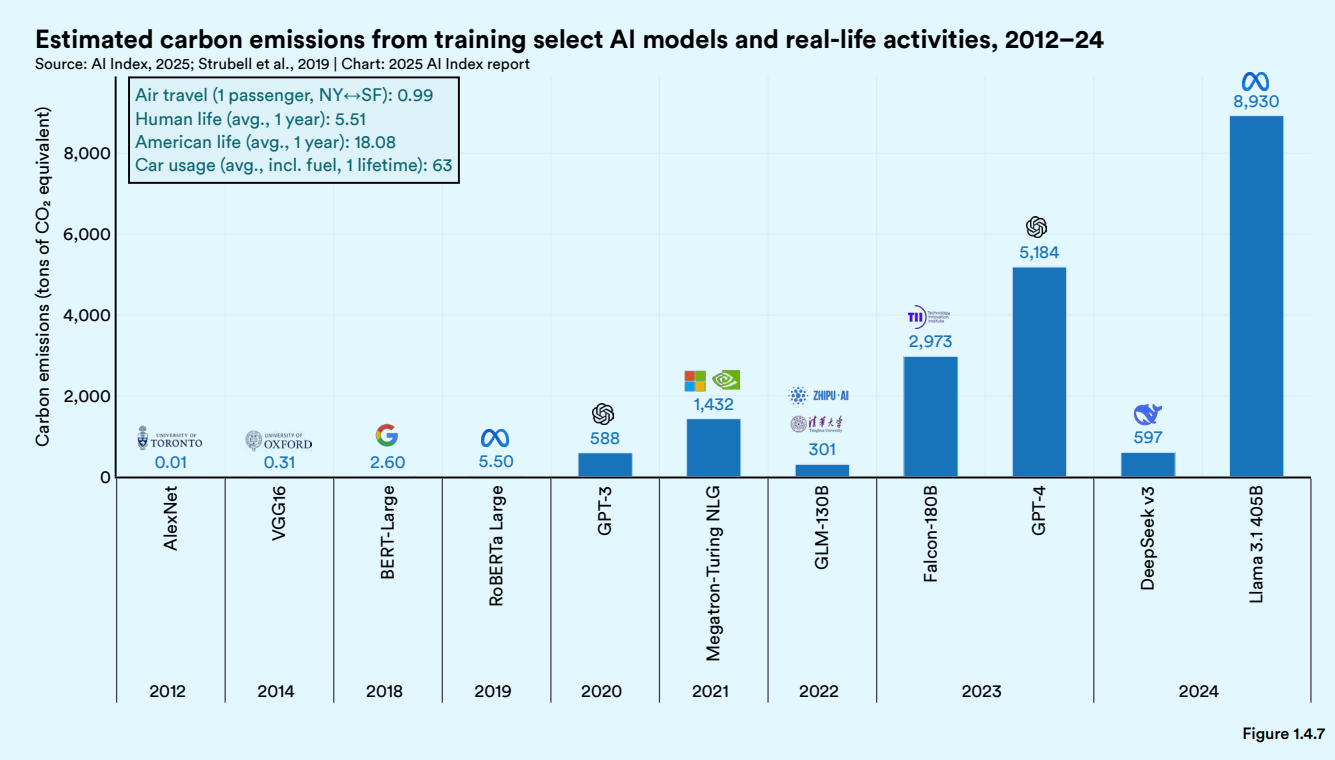

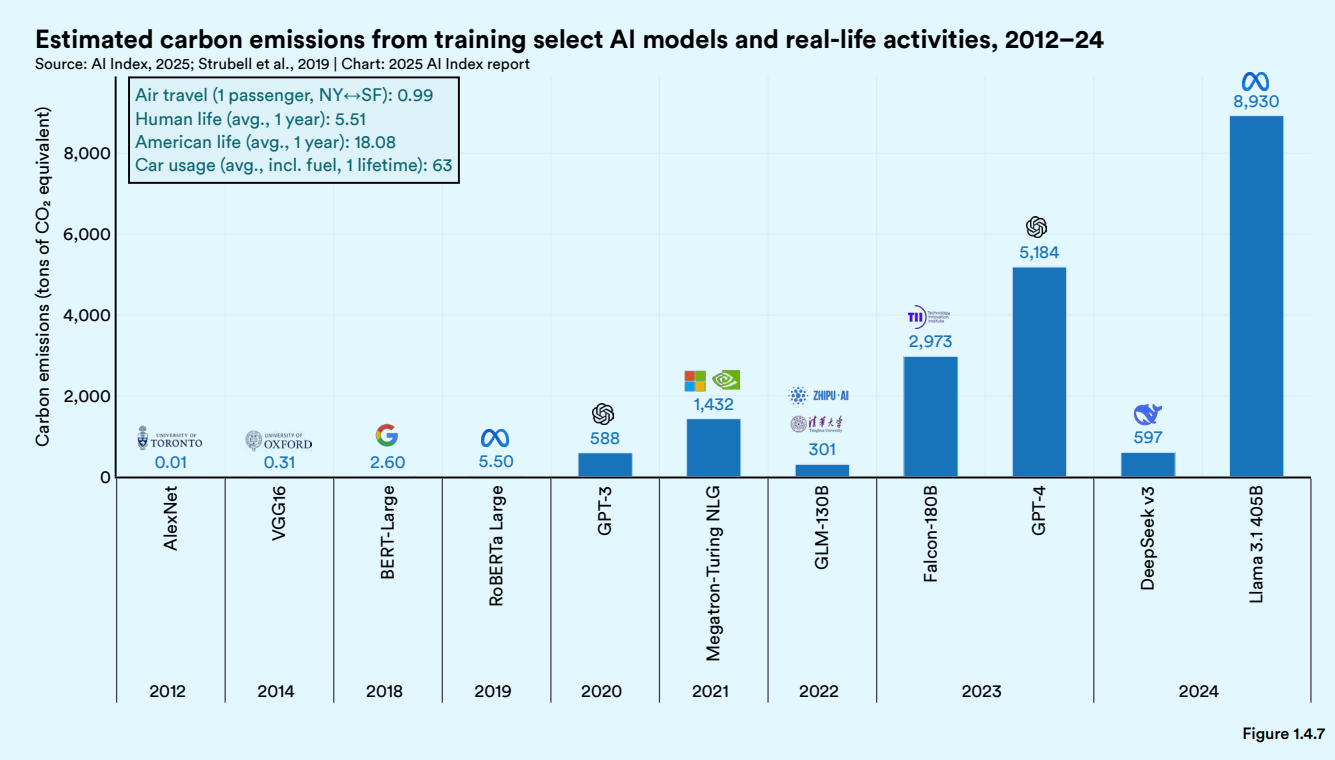

And lastly, there are the statistics we’ve shared from the AI Index report by Stanford: on average, a human emits 5.51 tons of CO2 per year, while training Llama 3.1 in 2024 alone emitted 8,930 tons of CO2. This opens our eyes to an unfortunate reality: AI does harm the planet.
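To put the two figures side by side, a quick calculation helps. Both numbers are the ones cited above; the variable names are ours.

```python
# Figures cited above from the AI Index report
HUMAN_TONS_CO2_PER_YEAR = 5.51
LLAMA_3_1_TRAINING_TONS_CO2 = 8_930

# How many years of an average person's emissions one training run equals
person_years = LLAMA_3_1_TRAINING_TONS_CO2 / HUMAN_TONS_CO2_PER_YEAR
print(f"One training run ≈ {person_years:,.0f} person-years of emissions")
```

That is roughly 1,600 years of one average person's emissions spent on a single training run.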

First, there is the question of how popular LLMs, such as Claude, GPT, and Gemini, were initially trained. We’ve seen a handful of cases in which data owners sued providers for illegally using their data for model training, including the German music rights society GEMA against OpenAI, and US authors’ copyright lawsuits against OpenAI and Microsoft.

Second, a recent Stanford study analysed the privacy policies of different LLM providers and found that all of them use users’ chats to train their models.

Of course, most of them offer an option to opt out, but many people may find the policy language unclear, which leads to users’ data being exploited without their actual consent.

Predictions that entry-level jobs will disappear and junior specialists will be replaced by AI are all over the Internet. Artificial intelligence and automation have already affected many new graduates’ lives, and rising unemployment among young people raises yet another ethical question about AI.

While some regulations on AI use are being implemented around the world, for example the EU AI Act, they address the legal side of artificial intelligence rather than the ethical one. Of course, some legal aspects, like data privacy and security, also appear in ethical AI principles, but the Act is more about laws than about the overall potential harm of artificial intelligence.

As we discovered, AI ethics is not regulated in any way and is rather a matter of conscience. That’s why there are no established rules, only recommendations for businesses and individuals that want to use AI ethically and ensure they harm neither human society nor the planet. So, here is what you can do to be on the right side of AI history.

Training your model with high-quality data will not only improve the overall model performance, but also, of course, minimize the chances of biased results. Therefore, invest in data preparation to ensure that you “feed” your model with fresh, diverse and representative data.

Ethically sourced data is data that doesn’t violate any privacy or copyright laws. That means that whether you take data from your users, buy it from other companies, or get it from open sources, you need the full consent of the data owners.

For example, if you use AI in your customer support chat, each user should be clearly informed that they are dealing with a bot, not a human representative. The same logic applies when you analyze customer data with artificial intelligence: you need to be open and transparent about it, and disclose all the necessary details of the process.

Recent research by UNESCO and UCL found that using smaller models trained for specific tasks, rather than large general-purpose models, can cut energy use by up to 90%.

Open-source models allow you to run their code and weights on your own infrastructure. By doing so, you host the model locally, have full control over its functionality and performance, and therefore improve security.

But data security is not the only advantage of open-source models in the ethical realm. The control you gain when hosting a model yourself allows you to calculate the energy needed for each query, and to select data centers in less carbon-intensive areas with more renewable energy sources.

Artificial intelligence does have a carbon footprint, and while for now it’s difficult to make it neutral, you can minimize it by calculating the impact and opting for better, less harmful alternatives.

For this, there is a special tool created by Canadian scientists that can help you estimate your carbon emissions.
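Whichever tool you use, the basic idea behind such an estimate is simple: energy used multiplied by the carbon intensity of the grid that supplied it. Here is a minimal sketch of that idea. Every number in it is a placeholder we made up for illustration, and the function and variable names are hypothetical; plug in measurements from your own infrastructure and grid data for your regions.

```python
def estimate_emissions_g(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Estimate emissions in grams of CO2: energy used times grid carbon intensity."""
    if energy_kwh < 0 or grid_intensity_g_per_kwh < 0:
        raise ValueError("energy and carbon intensity must be non-negative")
    return energy_kwh * grid_intensity_g_per_kwh

# Placeholder inputs (assumptions, not measurements)
ENERGY_PER_QUERY_KWH = 0.002
QUERIES_PER_DAY = 10_000
REGIONS_G_PER_KWH = {"region_a": 400.0, "region_b": 50.0}

daily_energy_kwh = ENERGY_PER_QUERY_KWH * QUERIES_PER_DAY
daily_emissions_kg = {
    region: estimate_emissions_g(daily_energy_kwh, intensity) / 1_000
    for region, intensity in REGIONS_G_PER_KWH.items()
}

for region, kg in daily_emissions_kg.items():
    print(f"{region}: {kg:.1f} kg CO2 per day")
```

Even with made-up numbers, the comparison shows why the choice of hosting region matters: the same workload can produce very different emissions depending on the grid behind it.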

We say it in probably almost every piece of content we produce: AI implementation requires deep analysis, critical thinking, and strategy, not blindly following trends. Applying AI to processes it just doesn’t fit may not only hurt your business and ROI, it also spends all of those resources on nothing but a toy.

Apart from always keeping a human in the loop for every use of artificial intelligence, prioritizing human expertise, talent, and well-being will put your company on the path to more ethical AI use. After all, AI is just a tool, not a replacement for people.

Now, you have a full picture of how AI may harm our lives, and what you can do about it. The rest is up to you, but we all know that with great power comes great responsibility.

If you want to share that responsibility with an AI agency that can help you navigate both the technical and ethical aspects of AI implementation, you’ve come to the right place. NineTwoThree AI studio has the knowledge of Ph.D.-level AI engineers and a belief in a more secure and sustainable AI future. So, without further ado, here is the link to contact us.
