In our recent article, Apps in ChatGPT: Higher Conversions or Lost Users, we explored the strategic implications of OpenAI’s shift toward becoming a platform. We debated the business pros and cons, and whether owning the interface beats the massive distribution of the ChatGPT ecosystem.

But strategy is only half the battle. We wanted to see exactly what this looks like in practice.

So, in case you’ve decided that this is a solution for you, or you just want to know in more detail what it takes to get running, here is our technical investigation. Our engineering team took a deep dive into the new Apps SDK to see how third-party developers can embed interactive UI and logic directly inside ChatGPT conversations.

Here is what we found.

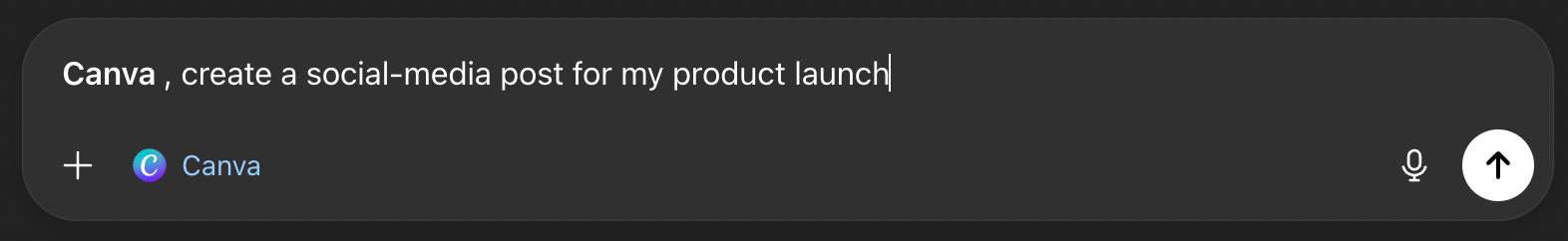

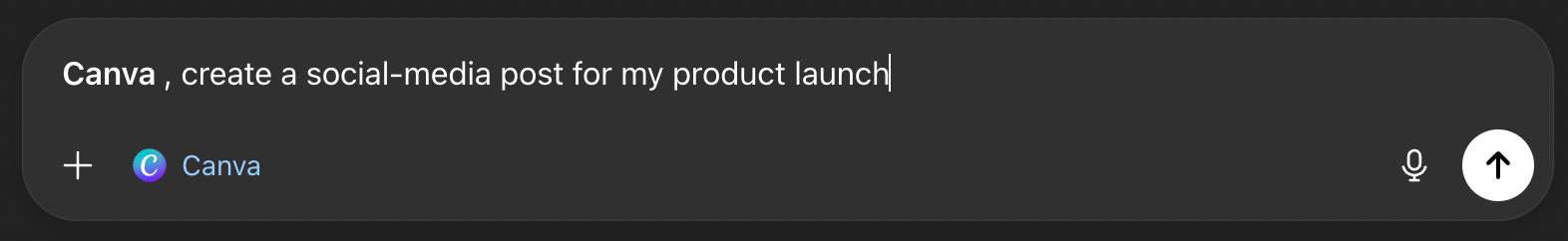

To understand what we are building, we first need to look at ChatGPT Apps from a user’s perspective. Here is how it typically goes: in the ChatGPT interface, a user types something like ‘Canva, create a social-media post for my product launch’, and the Canva UI appears right inside the chat.

As the screenshot shows, a small tag below the text input indicates that ChatGPT recognized an available app in the user's request. You can invoke an app by prefixing your message with its name, or ChatGPT can suggest the app during the conversation.

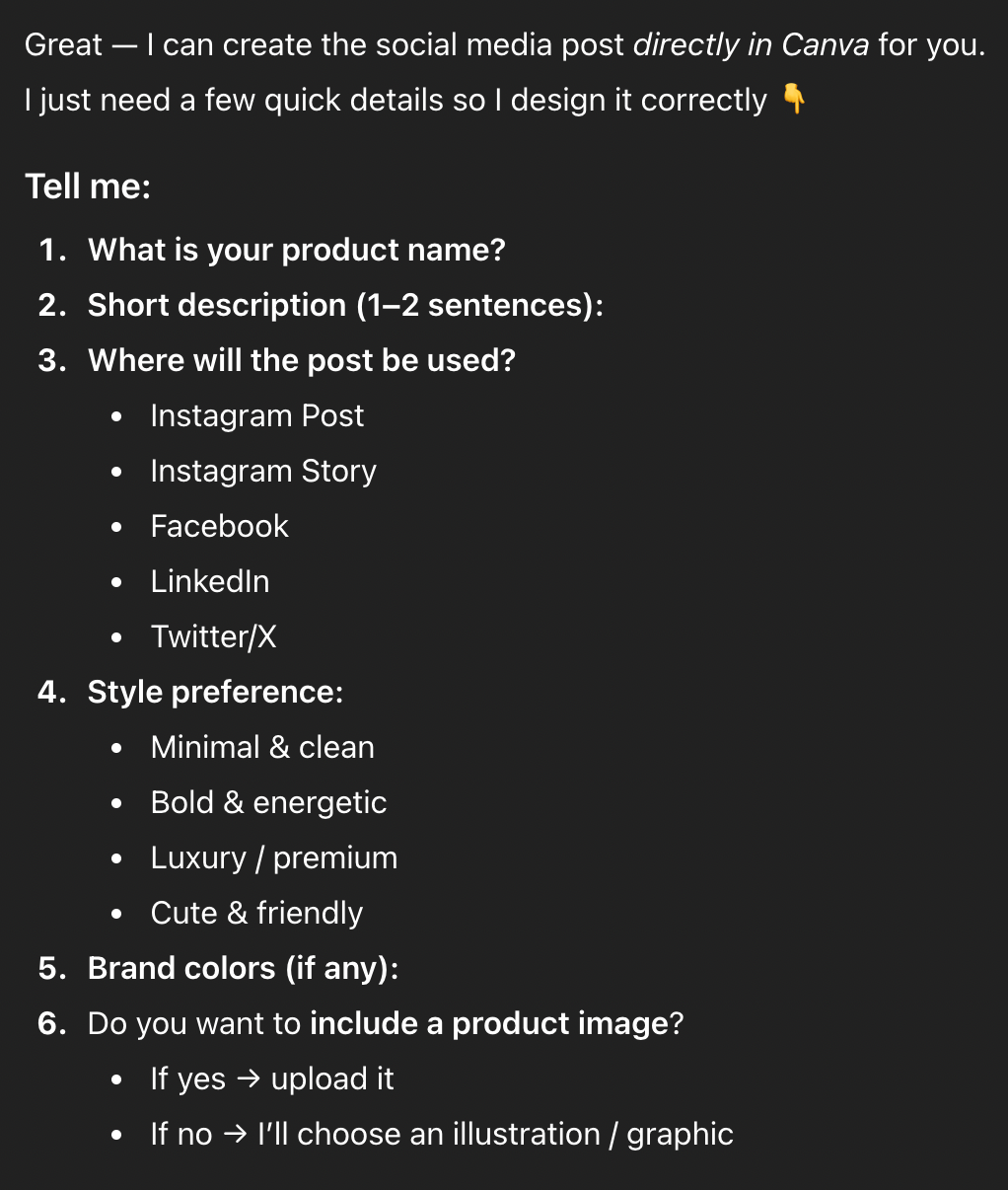

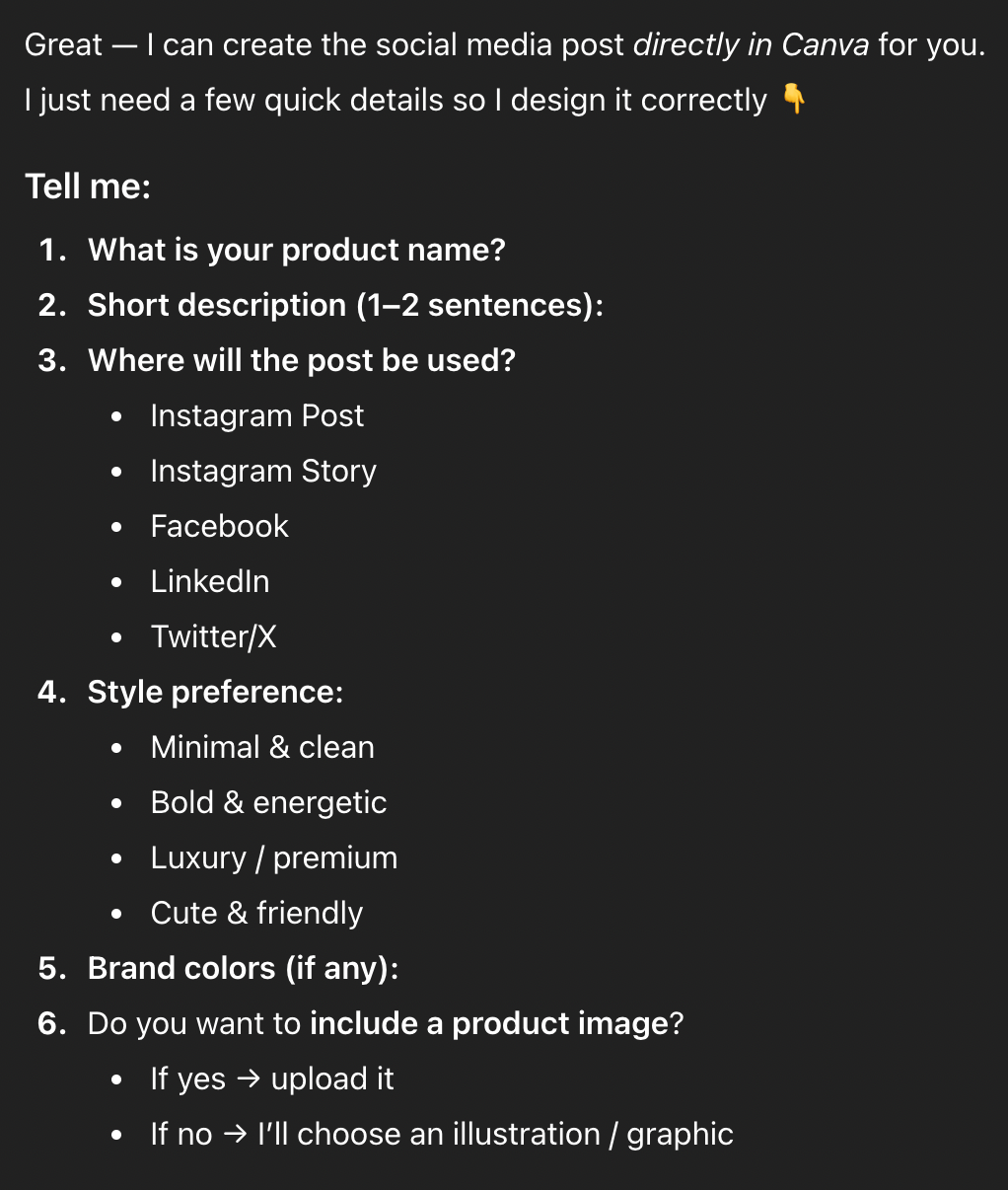

ChatGPT then asked the user for the minimum information needed to get started on the new product.

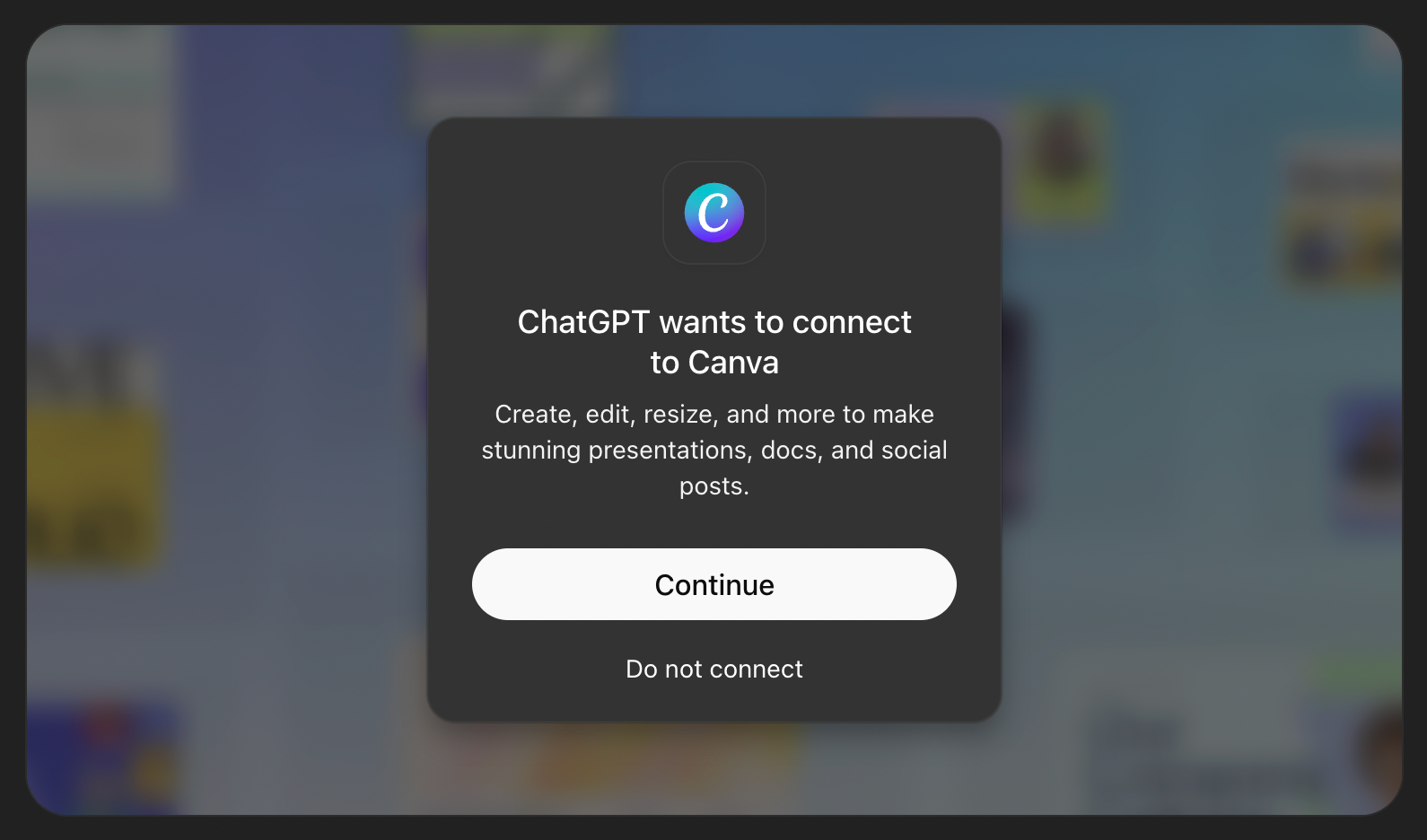

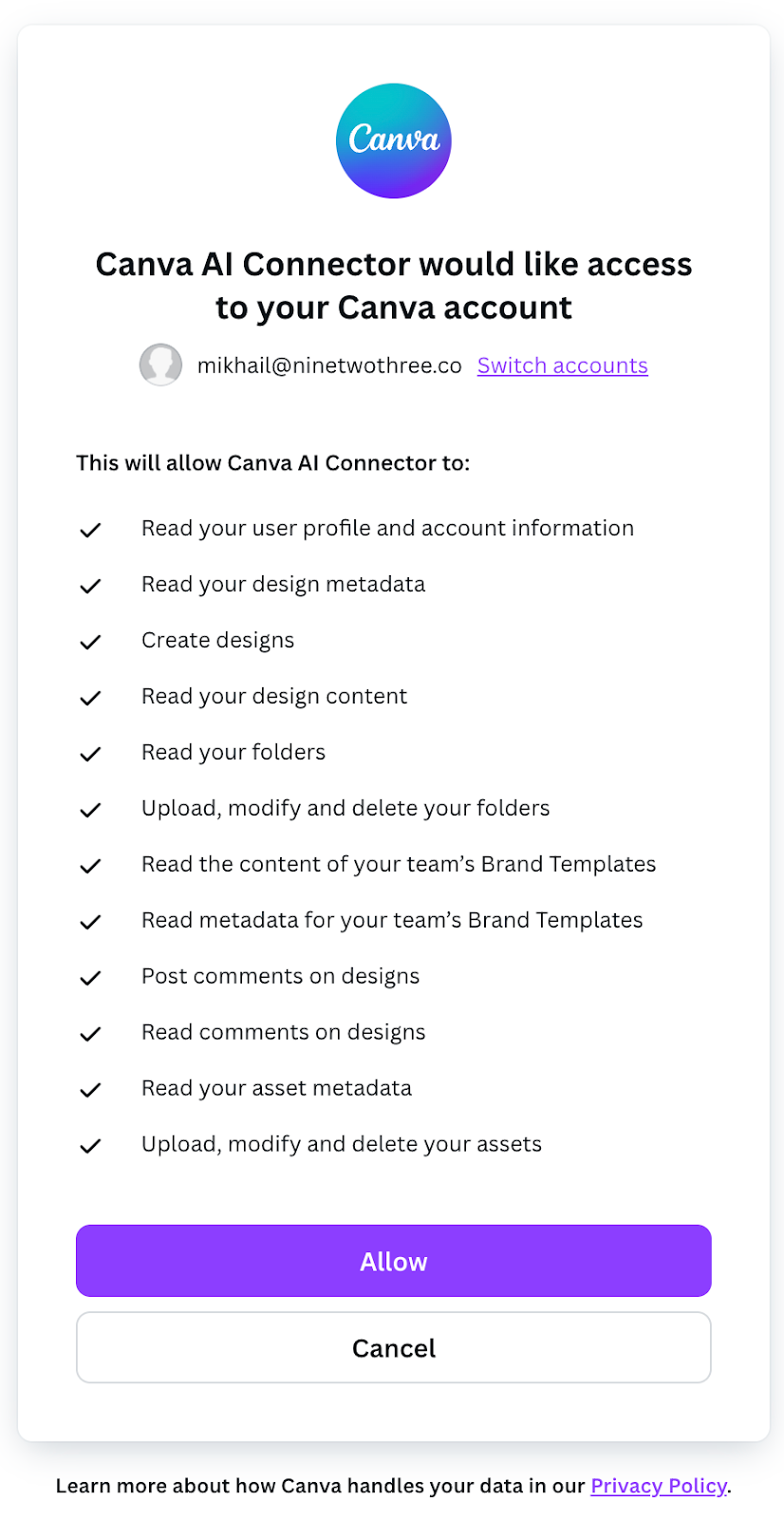

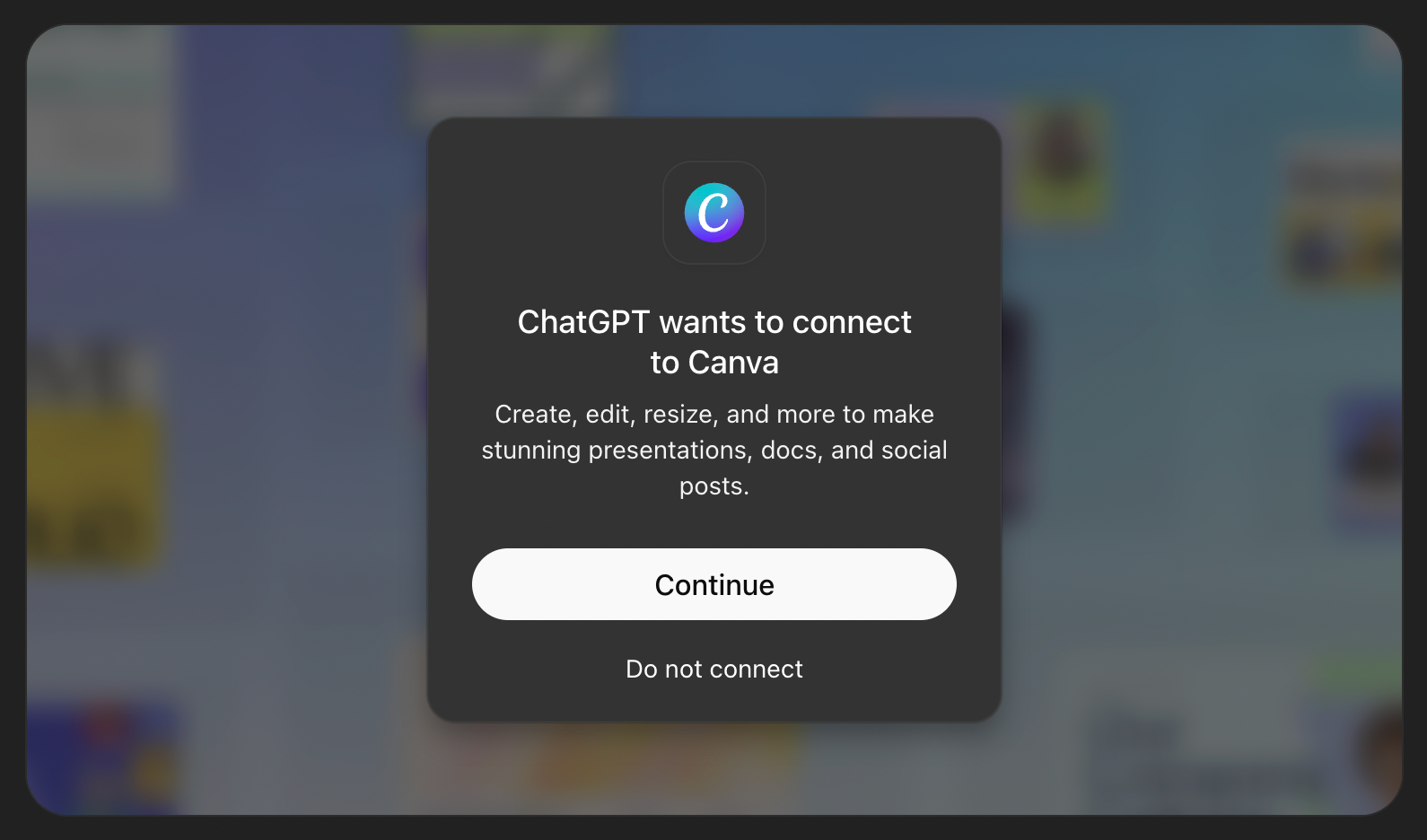

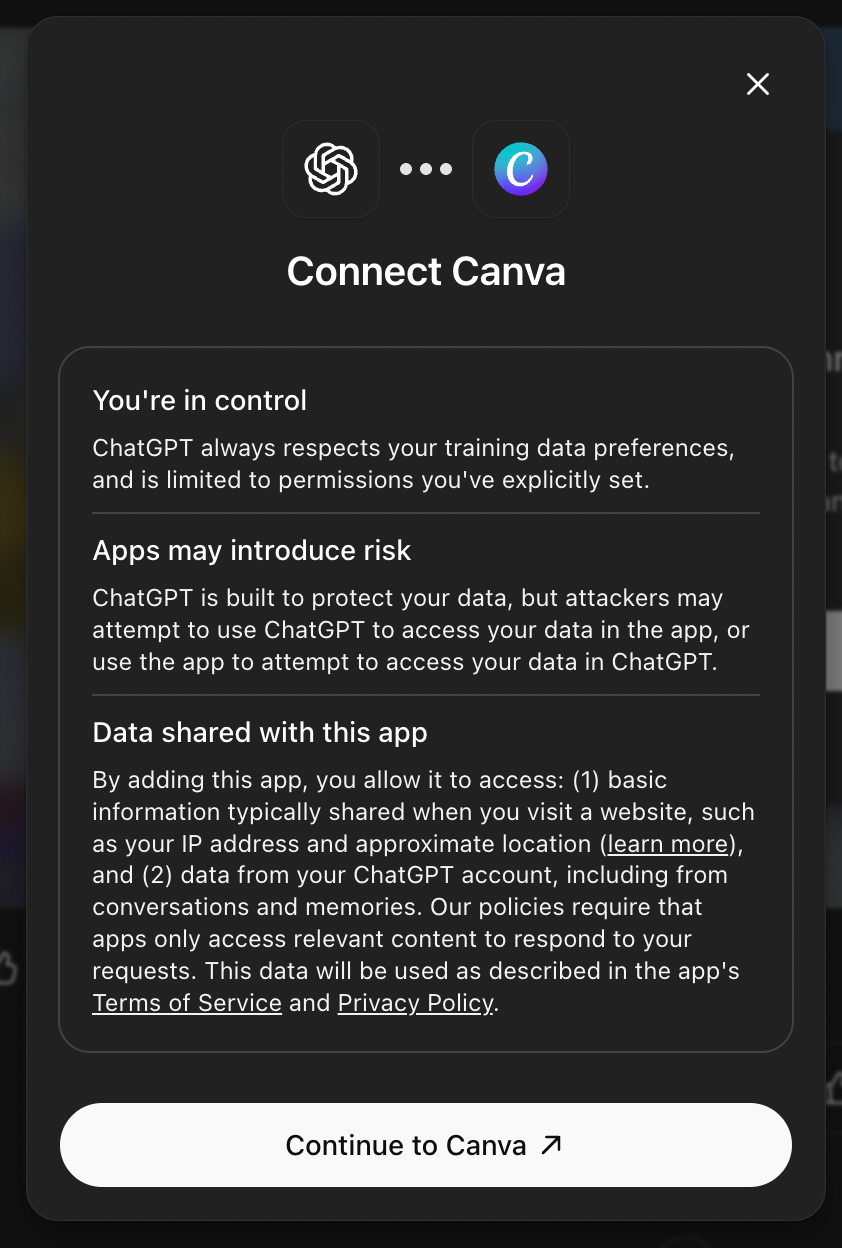

When told to fill in everything itself and create something cool and completely random, it asked to connect to the user's Canva account so it could save the results.

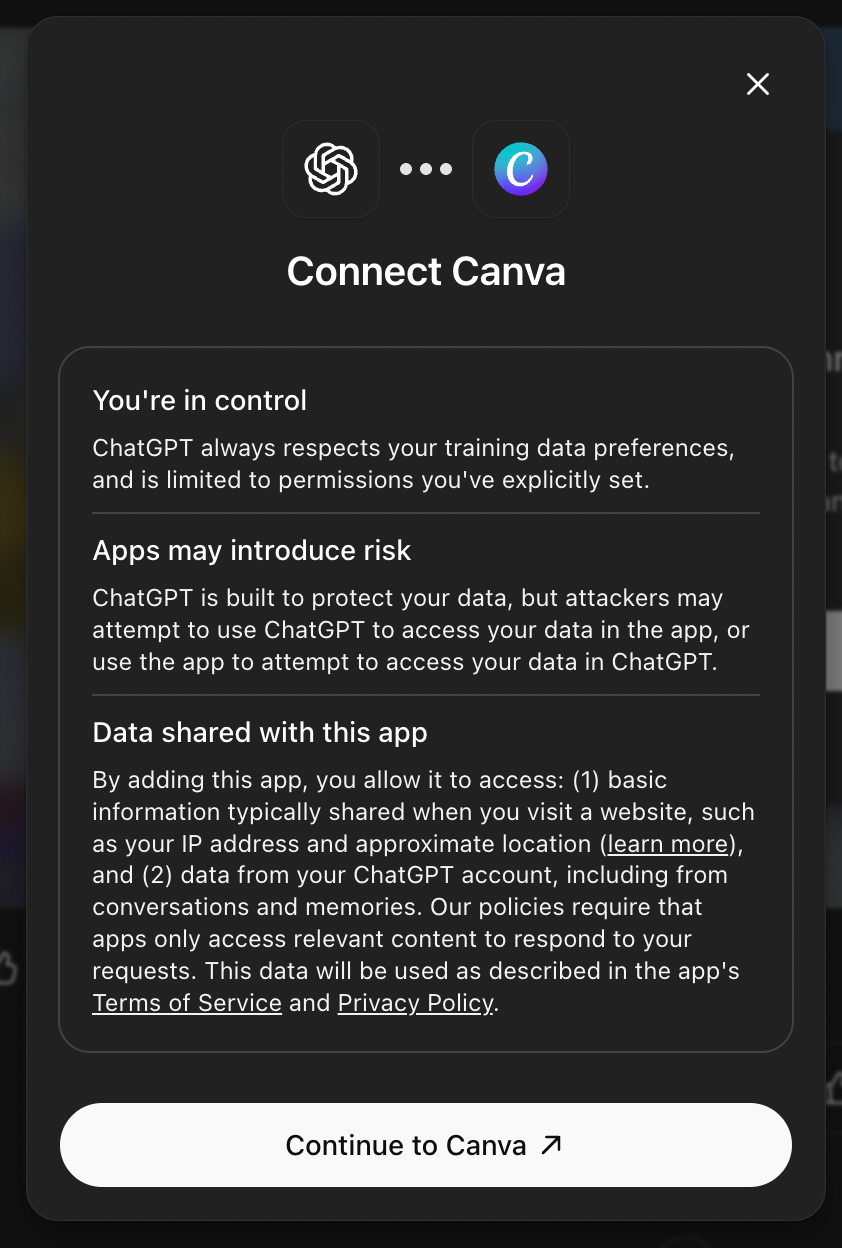

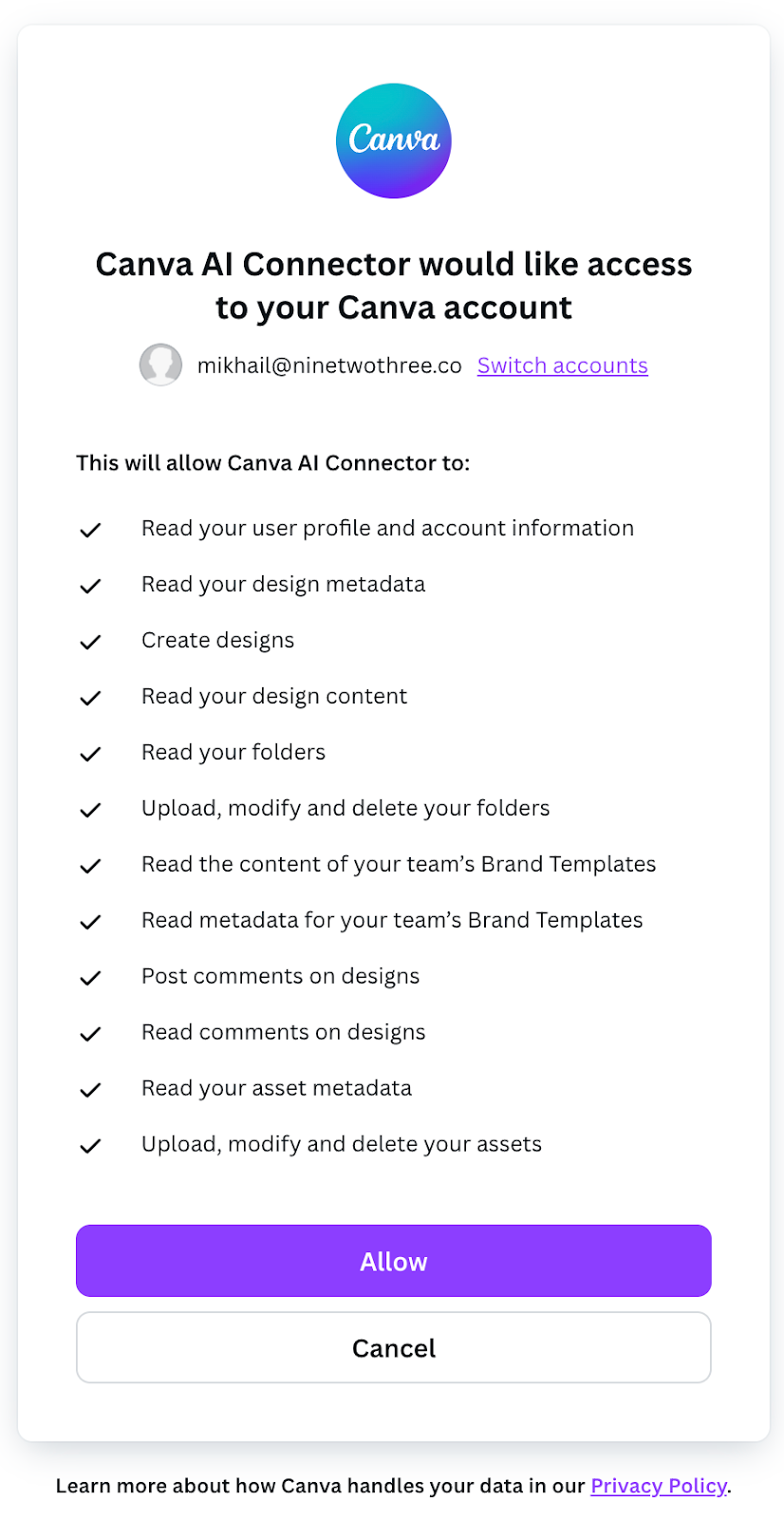

After the user grants a few permissions, Canva is connected to ChatGPT and can start producing example data.

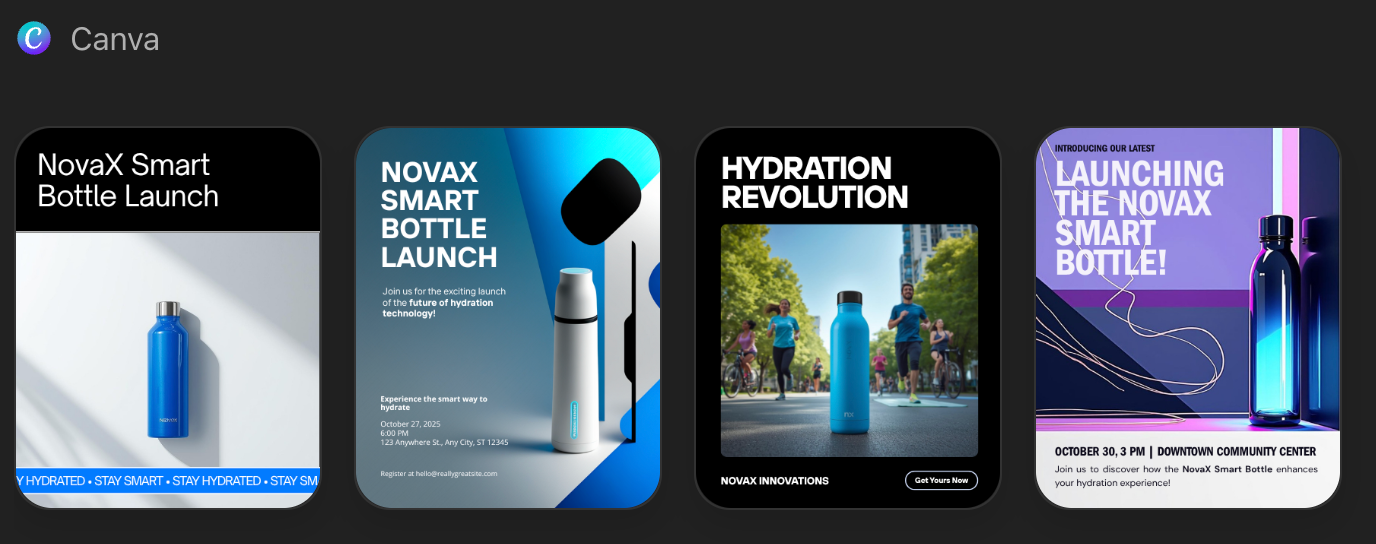

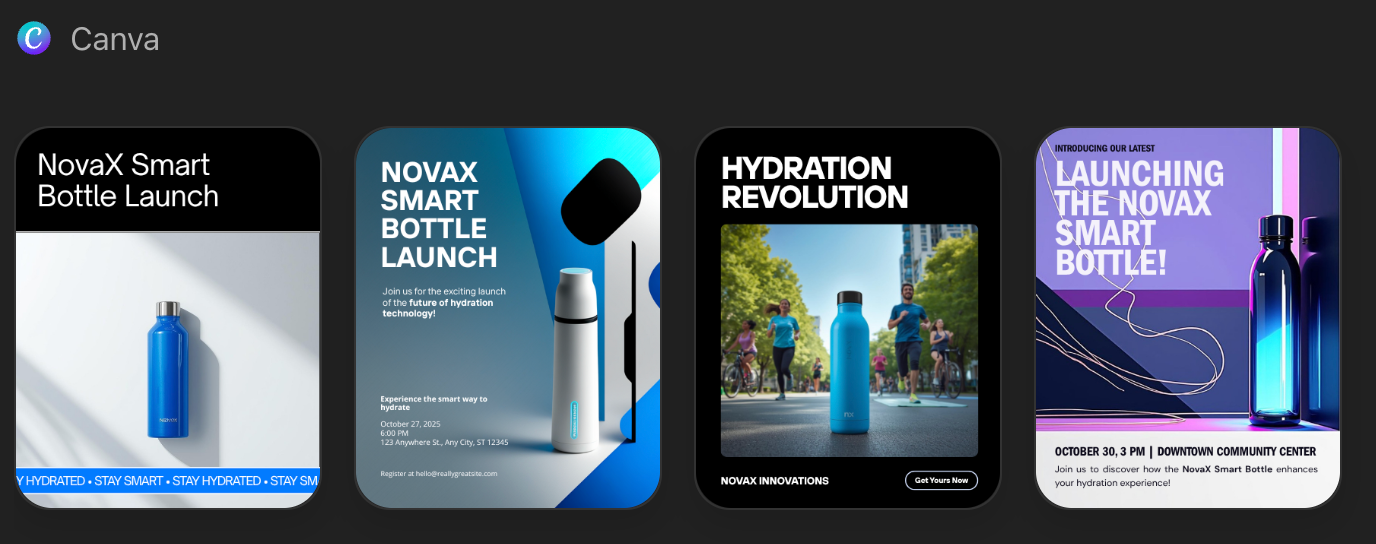

Here Canva created a few example posts for ‘NovaX Smart Bottle’, a new smart water bottle (style: bold & energetic; palette: electric blue / black / white), and provided design input for the product.

As you can see, ChatGPT apps are web-based applications embedded in the ChatGPT interface. This is made possible by the Apps SDK: developers provide a web UI and backend logic, while ChatGPT handles the conversational orchestration. As a result, your app can respond to natural-language commands and interact with the user directly in the chat.

Before we walk through the implementation step by step, let’s cover what you need to know.

The Model Context Protocol (MCP) server is the foundation of every Apps SDK integration. It exposes tools that the model can call, enforces authentication, and packages the structured data plus component HTML that the ChatGPT client renders inline. Developers can choose between two official SDKs, one for Python and one for TypeScript, which pair with frameworks such as FastAPI (Python) and Express (TypeScript).

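In the Apps SDK, tools are the contract between your MCP server and the model. They describe what the connector can do, how to call it, and what data comes back. A well-defined tool includes a unique name, a clear description the model can use to decide when to call it, and the expected input and output formats.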
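To make the contract concrete, here is a minimal sketch of a tool descriptor as a plain TypeScript object. The `name`, `title`, `description`, and `inputSchema` (JSON Schema) fields follow the MCP tool format. The `_meta["openai/outputTemplate"]` key, which links the tool to the widget HTML ChatGPT should render, reflects our reading of the Apps SDK docs and should be checked against the current reference. The `pizza-list` tool, its `pizzaTopping` argument, and the `ui://widget/pizza-list.html` URI are illustrative assumptions loosely modeled on the Pizza example.

```typescript
// Minimal JSON Schema subset used by this sketch (not the full spec).
interface JsonSchemaObject {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required?: string[];
}

// Shape of an MCP tool descriptor, reduced to the fields used here.
interface ToolDescriptor {
  name: string;
  title?: string;
  description: string;
  inputSchema: JsonSchemaObject;
  _meta?: Record<string, unknown>;
}

// Hypothetical tool: lists pizza places that serve a given topping.
const pizzaListTool: ToolDescriptor = {
  name: "pizza-list",
  title: "Show pizza list",
  description: "Lists pizza places that serve the requested topping.",
  inputSchema: {
    type: "object",
    properties: {
      pizzaTopping: { type: "string", description: "Topping to search for" },
    },
    required: ["pizzaTopping"],
  },
  _meta: {
    // Assumption: Apps SDK key pointing at the widget template resource.
    "openai/outputTemplate": "ui://widget/pizza-list.html",
  },
};

// Return the required arguments that are missing from a call.
function missingArgs(tool: ToolDescriptor, args: Record<string, unknown>): string[] {
  return (tool.inputSchema.required ?? []).filter((key) => !(key in args));
}

console.log(missingArgs(pizzaListTool, {}));
console.log(missingArgs(pizzaListTool, { pizzaTopping: "pepperoni" }));
```

Keeping the description specific matters as much as the schema: it is the text the model reads when deciding whether this tool fits the user's request.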

UI components turn structured tool results into a human-friendly interface. Apps SDK components are typically React components that run inside an iframe, communicate with the host via the window.openai API, and render inline with the conversation. These components should be lightweight, responsive, and focused on a single task.
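React is the usual choice, but the core idea fits in a framework-free sketch. The component reads the structured tool result the host exposes and turns it into markup. We assume the result is available as `window.openai.toolOutput`, as described in the Apps SDK docs. To keep the example runnable outside ChatGPT, the host object is passed in as a parameter and mocked. The `PizzaPlace` type and its fields are hypothetical.

```typescript
// Hypothetical structured data returned by a tool.
interface PizzaPlace {
  name: string;
  rating: number;
}

// The slice of the host API this sketch relies on (assumed shape).
interface OpenAiHost {
  toolOutput: { places?: PizzaPlace[] } | null;
}

// Escape text before inserting it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the tool result as a list, or a placeholder when there is no data yet.
function renderPlaces(host: OpenAiHost): string {
  const places = host.toolOutput?.places ?? [];
  if (places.length === 0) return "<p>No results yet.</p>";
  const items = places
    .map((p) => `<li>${escapeHtml(p.name)} (${p.rating.toFixed(1)})</li>`)
    .join("");
  return `<ul>${items}</ul>`;
}

// Inside ChatGPT this would be renderPlaces(window.openai); here the host is mocked.
const mockHost: OpenAiHost = {
  toolOutput: { places: [{ name: "Mike's Pizza", rating: 4.5 }] },
};
console.log(renderPlaces(mockHost));
```

Treating the host as an injected dependency also makes the component easy to test in isolation, which helps given that widget rendering inside ChatGPT may not be available on every account.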

Developing a ChatGPT app begins with setting up a server that leverages one of the official SDKs — Python or TypeScript — typically using frameworks such as FastAPI or Express. This server, often referred to as the MCP server, acts as the bridge between ChatGPT and application logic. Within this environment, developers define tools, which are structured descriptors that outline the actions the app can perform, including the expected input and output formats. These tools form the contract between the app and the model, allowing ChatGPT to invoke the correct functionality based on user commands.
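At its core, the server answers two JSON-RPC methods defined by MCP. `tools/list` advertises the available tools, and `tools/call` runs one of them by name. The sketch below shows only that dispatch step, with no transport, no initialization handshake, and no SDK. In a real app, the official SDK handles those details. The `echo` tool is hypothetical. The error codes follow JSON-RPC conventions as we understand the MCP spec.

```typescript
// Minimal JSON-RPC 2.0 shapes used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

interface ToolResult {
  content: { type: "text"; text: string }[];
  structuredContent?: Record<string, unknown>;
}

interface RegisteredTool {
  description: string;
  inputSchema: object;
  handler: (args: Record<string, unknown>) => ToolResult;
}

// Hypothetical registry with a single illustrative tool.
const tools = new Map<string, RegisteredTool>([
  [
    "echo",
    {
      description: "Returns the text it receives.",
      inputSchema: { type: "object", properties: { text: { type: "string" } }, required: ["text"] },
      handler: (args) => ({
        content: [{ type: "text", text: String(args.text) }],
        structuredContent: { echoed: String(args.text) },
      }),
    },
  ],
]);

// Route a request: list tools, call one by name, or return an error.
function handleRequest(req: JsonRpcRequest): JsonRpcResponse {
  const base = { jsonrpc: "2.0" as const, id: req.id };
  if (req.method === "tools/list") {
    const list = Array.from(tools.entries()).map(([name, t]) => ({
      name,
      description: t.description,
      inputSchema: t.inputSchema,
    }));
    return { ...base, result: { tools: list } };
  }
  if (req.method === "tools/call") {
    const name = req.params?.name ?? "";
    const tool = tools.get(name);
    if (!tool) {
      // Unknown tool: reported as an invalid-params error.
      return { ...base, error: { code: -32602, message: `Unknown tool: ${name}` } };
    }
    return { ...base, result: tool.handler(req.params?.arguments ?? {}) };
  }
  // -32601 is the standard JSON-RPC "method not found" code.
  return { ...base, error: { code: -32601, message: `Method not found: ${req.method}` } };
}
```

A `Map` is used for the registry so that a lookup for a name like `toString` cannot accidentally hit an inherited object property.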

The frontend is implemented as a web-based UI that is embedded within ChatGPT’s chat interface. This UI is usually built with frameworks like React and communicates with the host through the window.openai API. The design of the UI should prioritize responsiveness and simplicity, ensuring a smooth experience for users on both desktop and mobile devices. Authentication mechanisms must also be integrated to verify user identity and maintain session context. Finally, persistent state management ensures that relevant user data and interactions can be stored securely, enabling multi-step or context-sensitive workflows.
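For UI state that belongs to a single widget, such as which items a user has marked, the Apps SDK docs describe `window.openai.widgetState` and `window.openai.setWidgetState()`. The sketch below assumes those two members and mocks the host so it runs outside ChatGPT. The `favorites` field is hypothetical. Data that must outlive the conversation should still go to your own backend.

```typescript
// Hypothetical widget-scoped state.
interface WidgetState {
  favorites: string[];
}

// Assumed slice of the host API used for persistence.
interface StateHost {
  widgetState: WidgetState | null;
  setWidgetState(state: WidgetState): Promise<void>;
}

// Toggle a favorite, hand the new state to the host, and return it.
function toggleFavorite(host: StateHost, id: string): WidgetState {
  const current = host.widgetState ?? { favorites: [] };
  const favorites = current.favorites.includes(id)
    ? current.favorites.filter((f) => f !== id)
    : [...current.favorites, id];
  const next: WidgetState = { favorites };
  // Fire-and-forget: the host persists the state asynchronously.
  void host.setWidgetState(next);
  return next;
}

// In-memory stand-in for window.openai, for local testing only.
function createMockHost(): StateHost {
  const host: StateHost = {
    widgetState: null,
    async setWidgetState(state) {
      host.widgetState = state;
    },
  };
  return host;
}

const host = createMockHost();
toggleFavorite(host, "mikes-pizza");
console.log(host.widgetState);
```

The state is kept as a small, serializable object so that it can be restored when the widget is shown again.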

Once the app is developed, deploying it requires careful attention to platform requirements and security standards.

Security and privacy are critical considerations when building ChatGPT apps.

Testing a ChatGPT app involves both functional validation and performance monitoring.
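You can start on both kinds of checks outside ChatGPT by calling a tool handler directly. The sketch below runs a hypothetical `searchPizza` handler, validates its structured result, and flags the call if it exceeds a time budget. The 500 ms budget is an illustrative assumption, not an Apps SDK requirement. The handler is synchronous to keep the example short. Real handlers are usually async and would be awaited.

```typescript
// Outcome of one functional + timing check.
interface CheckResult {
  ok: boolean;
  durationMs: number;
  problems: string[];
}

// Hypothetical tool handler under test (synchronous for brevity).
function searchPizza(args: { topping: string }) {
  return {
    content: [{ type: "text" as const, text: `Found places with ${args.topping}` }],
    structuredContent: { topping: args.topping, places: ["Mike's Pizza"] },
  };
}

// Run a handler once, validate its output, and flag it if it exceeds the time budget.
function checkTool<A, R>(
  handler: (args: A) => R,
  args: A,
  validate: (result: R) => string[],
  budgetMs = 500,
): CheckResult {
  const start = Date.now();
  const result = handler(args);
  const durationMs = Date.now() - start;
  const problems = [...validate(result)];
  if (durationMs > budgetMs) {
    problems.push(`took ${durationMs} ms (budget ${budgetMs} ms)`);
  }
  return { ok: problems.length === 0, durationMs, problems };
}

const report = checkTool(searchPizza, { topping: "pepperoni" }, (r) =>
  r.structuredContent.places.length > 0 ? [] : ["expected at least one place"],
);
console.log(report.ok, report.problems);
```

Checks like this catch contract regressions cheaply. End-to-end behavior, such as whether the model actually picks the right tool, still has to be verified inside ChatGPT.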

ChatGPT apps are inherently web-based and run inside the ChatGPT interface; it is not currently possible to embed fully native mobile code directly within the ChatGPT mobile app. This design has important implications for developers targeting iOS, Android, or cross-platform frameworks such as React Native.

To provide a seamless experience across mobile devices, developers must build a responsive web UI for their app. This UI should adapt to varying screen sizes and orientations, ensuring usability on smartphones and tablets. CSS frameworks, such as Tailwind or Bootstrap, or front-end frameworks like React, Vue, or Svelte, can help achieve a mobile-friendly design while keeping the interface lightweight enough to render smoothly inside ChatGPT.

For teams that already maintain a mobile app — such as a React Native application — ChatGPT apps can serve as a subset or extension of the full-feature app. Users may start with the ChatGPT-embedded web interface and, if additional functionality is required, transition seamlessly to the native app via deep linking. This approach allows developers to leverage the ChatGPT user base while still offering richer experiences in their native apps.
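A deep-link hand-off can be as simple as building a URL that carries enough context for the native app to pick up where the chat left off, plus a web URL for users who don't have the app. The sketch below assumes a hypothetical custom scheme `myapp://`, a hypothetical `orders/checkout` path, and `example.com` as the web fallback. Inside a widget, the link would be opened through the host; the Apps SDK docs describe `window.openai.openExternal` for this, but the sketch does not call it.

```typescript
// Links for handing a user off from the ChatGPT widget to the native app.
interface HandoffLinks {
  deepLink: string;
  webFallback: string;
}

// Build a deep link and web fallback carrying the same context.
// Scheme, host, and path are hypothetical placeholders.
function buildHandoffLinks(orderId: string, source = "chatgpt"): HandoffLinks {
  const query = `orderId=${encodeURIComponent(orderId)}&source=${encodeURIComponent(source)}`;
  return {
    deepLink: `myapp://orders/checkout?${query}`,
    webFallback: `https://example.com/orders/checkout?${query}`,
  };
}

console.log(buildHandoffLinks("A 42"));
```

Using the same query string in both links means the native app and the web fallback receive identical context. A `source` parameter also lets you measure how many users arrive from ChatGPT.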

There are, however, some constraints and considerations to be aware of.

Overall, the recommended approach is to treat the ChatGPT app as a mobile-accessible mini-app that provides core functionality, while using deep links or integration with your native app to deliver more advanced features. This hybrid strategy maximizes reach within ChatGPT while preserving the richness and performance of your full mobile experience.

OpenAI is exploring monetization for apps in ChatGPT, but there are caveats. The policies are still being developed, and OpenAI plans to share updates later, so built-in monetization flows may not be available right away.

This chapter outlines the steps taken to test the Pizza app example from the OpenAI Apps SDK Examples repository. The process served as a proof of concept (PoC) to evaluate the integration of custom widgets and tools within ChatGPT.

The initial step involved cloning the official OpenAI Apps SDK Examples repository:

```shell
git clone https://github.com/openai/openai-apps-sdk-examples.git
cd openai-apps-sdk-examples
```

Next, the dependencies were installed with pnpm and the repository's pre-commit hooks were set up:

```shell
pnpm install
pre-commit install
```

These commands set up the required packages for both the frontend components and the backend server.

Before starting the server, it was essential to build the frontend assets. This was accomplished by running:

```shell
pnpm run build
```

This command generated the HTML, JavaScript, and CSS files in the assets/ directory that are needed to render the widgets in ChatGPT.

With the frontend assets in place, the next step was to start the backend server using:

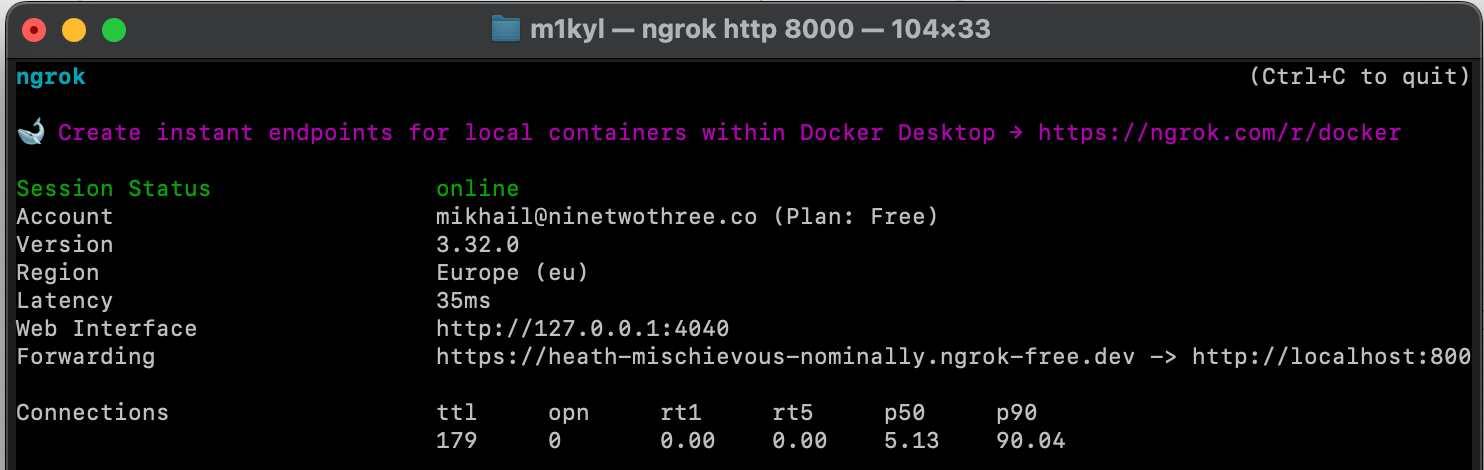

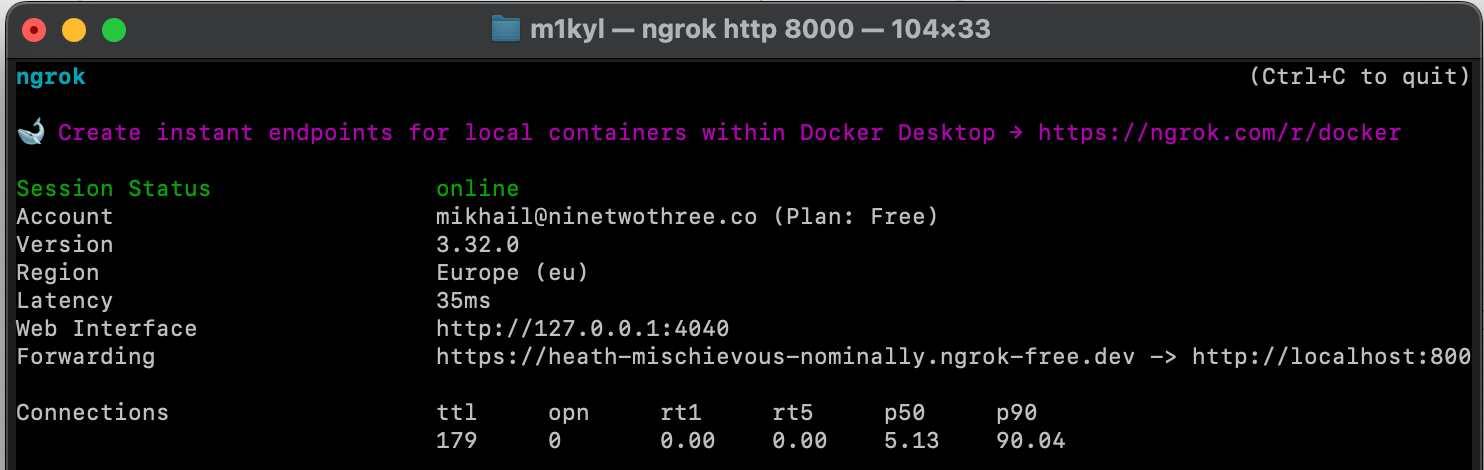

pnpm startTo allow ChatGPT to communicate with the locally running server, it was necessary to expose the server to the internet. This was achieved using ngrok, a tool that creates a secure tunnel to localhost:

```shell
ngrok http 8000
```

Ngrok provided a public URL that forwarded requests to the local server:

https://heath-mischievous-nominally.ngrok-free.dev

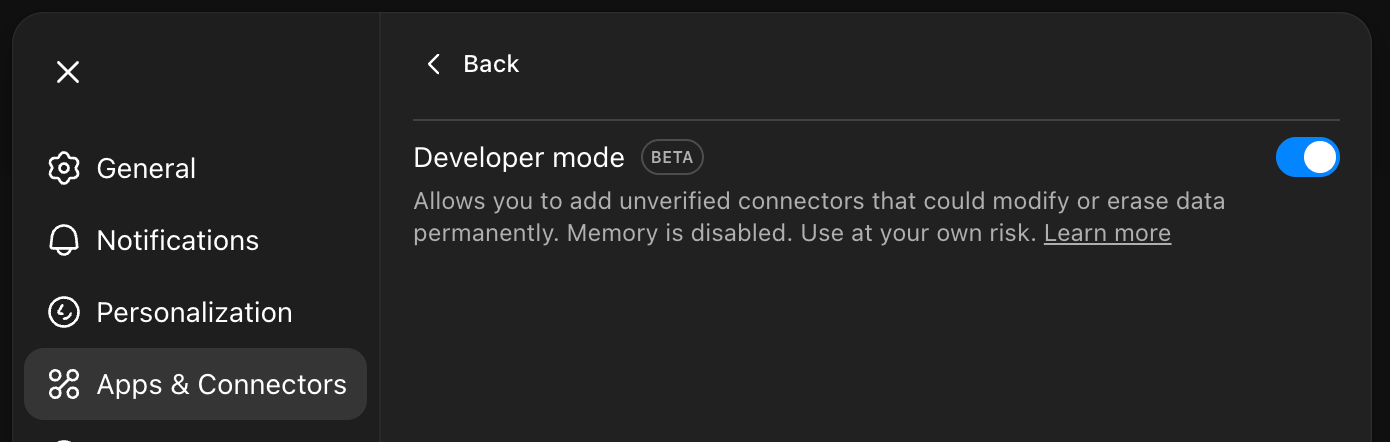

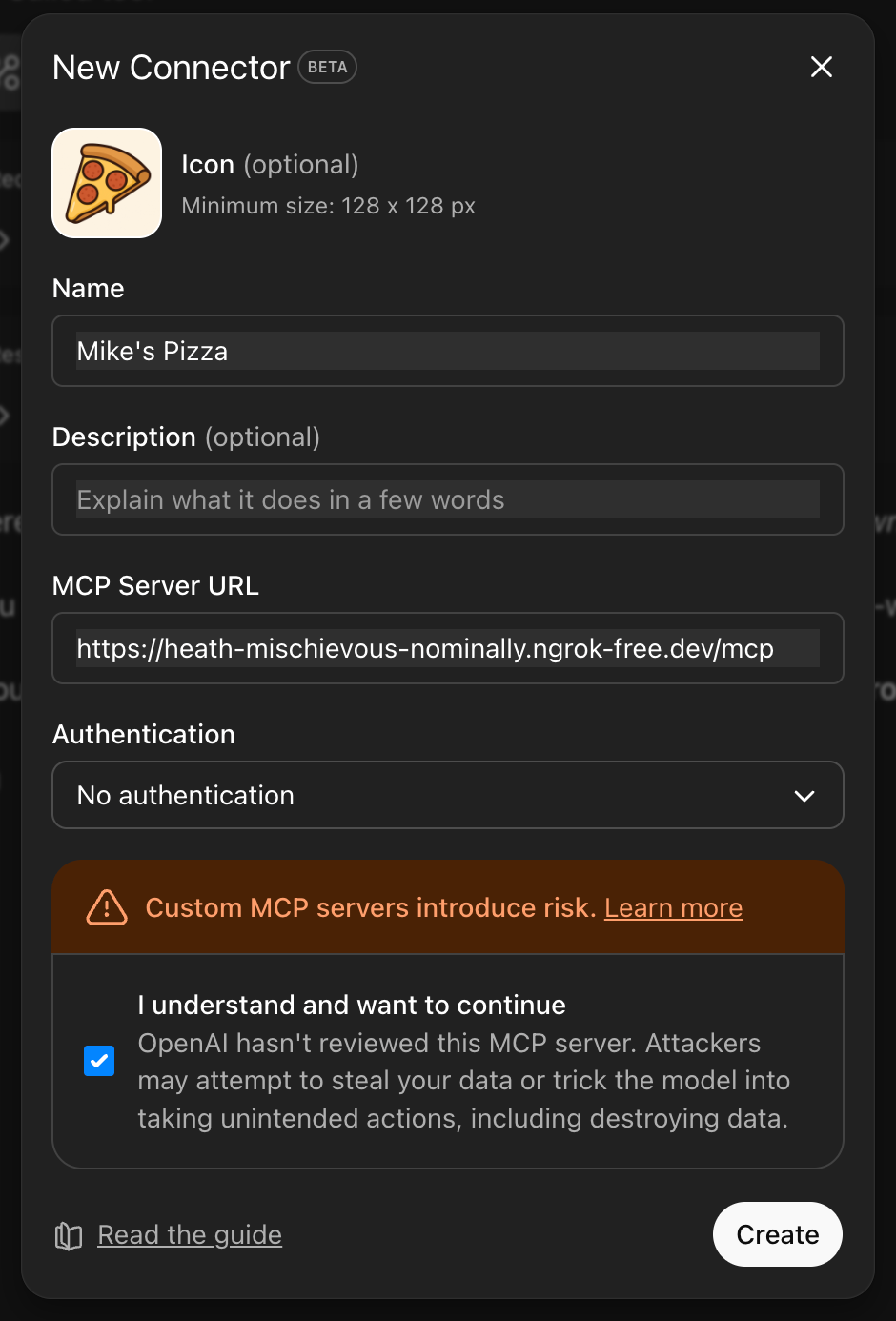

With the server accessible via a public URL, the next step was to configure ChatGPT to recognize the custom app:

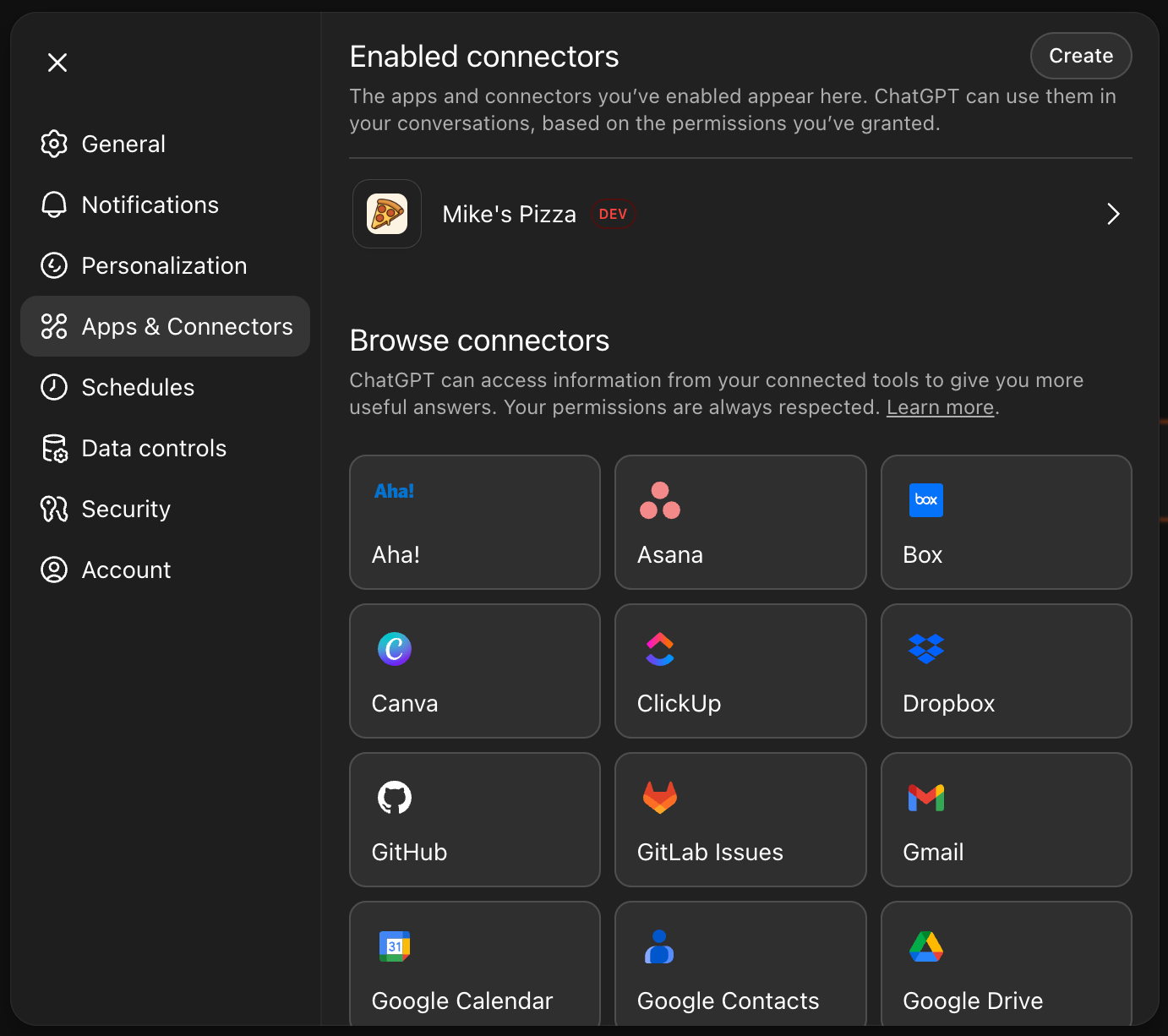

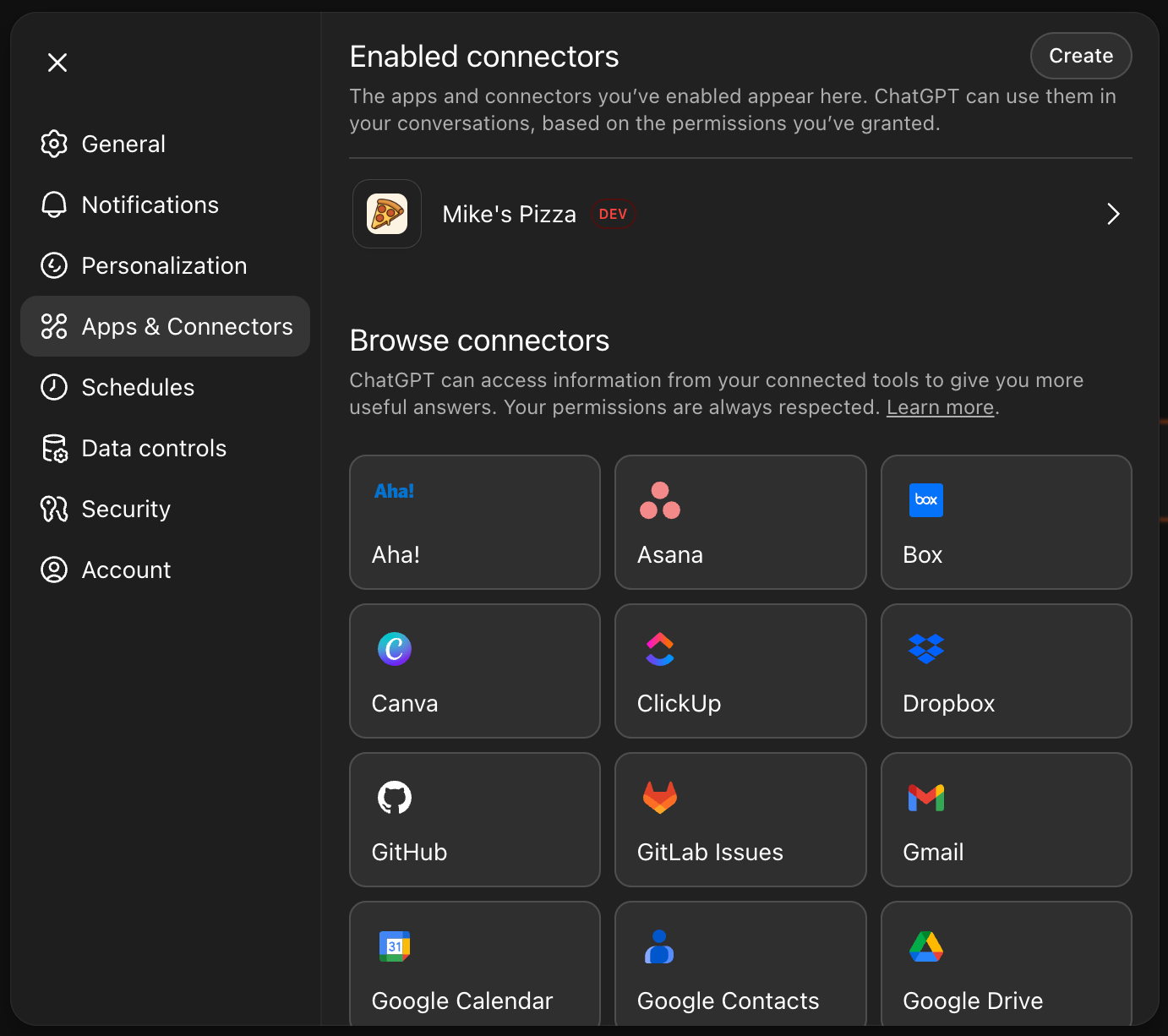

After that, the app appears in the list of enabled connectors:

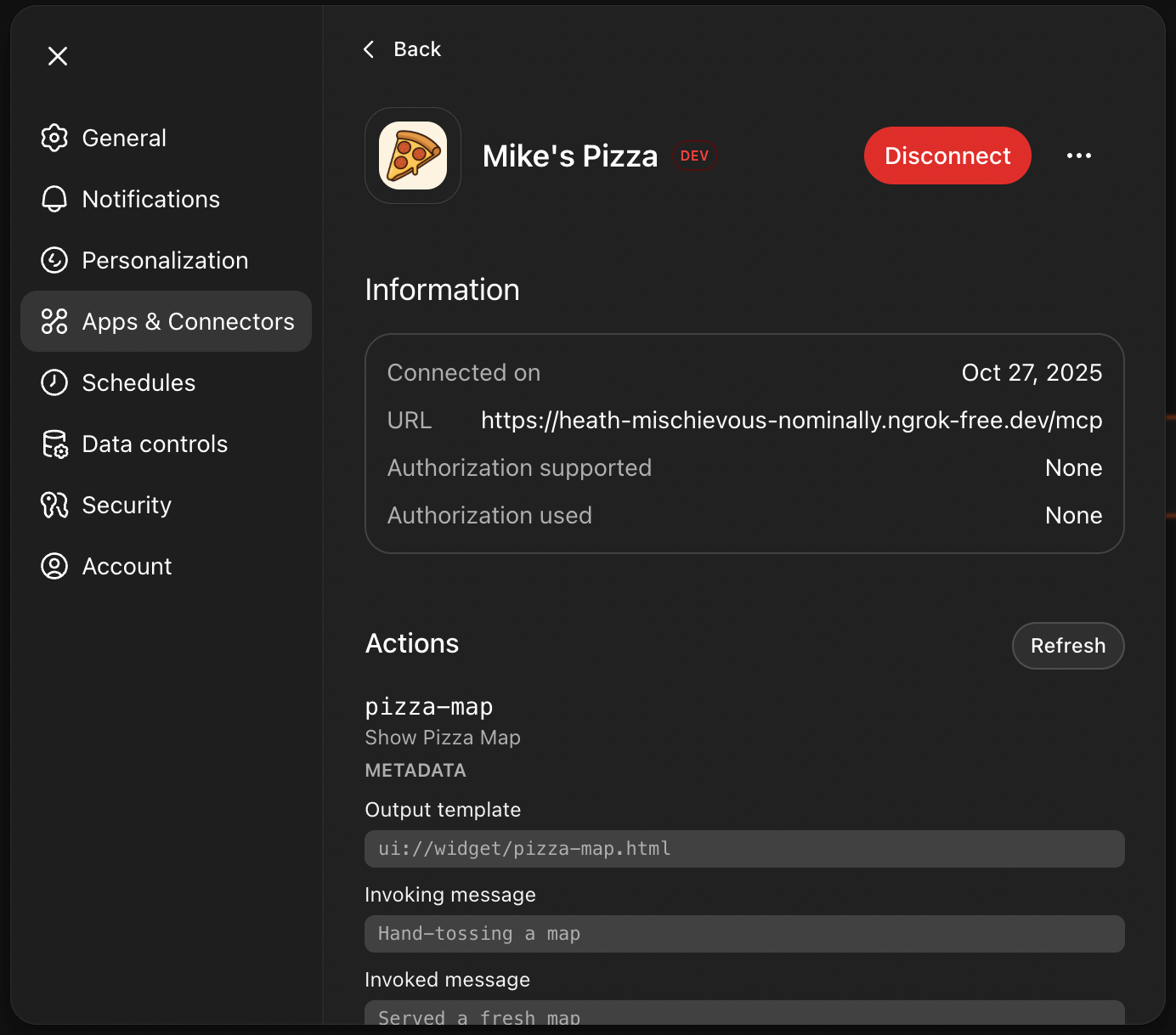

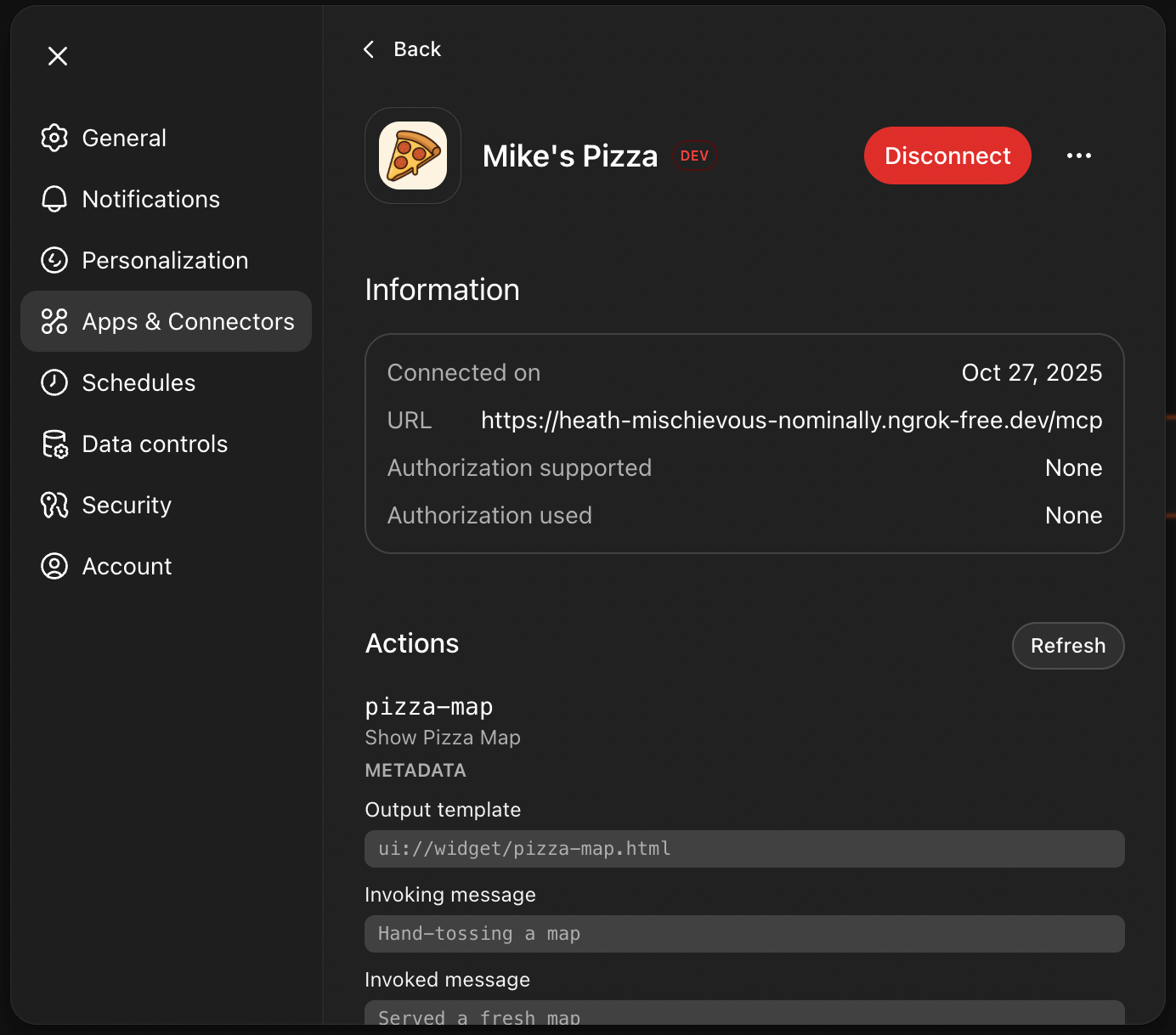

The app details page also lists the actions this app supports.

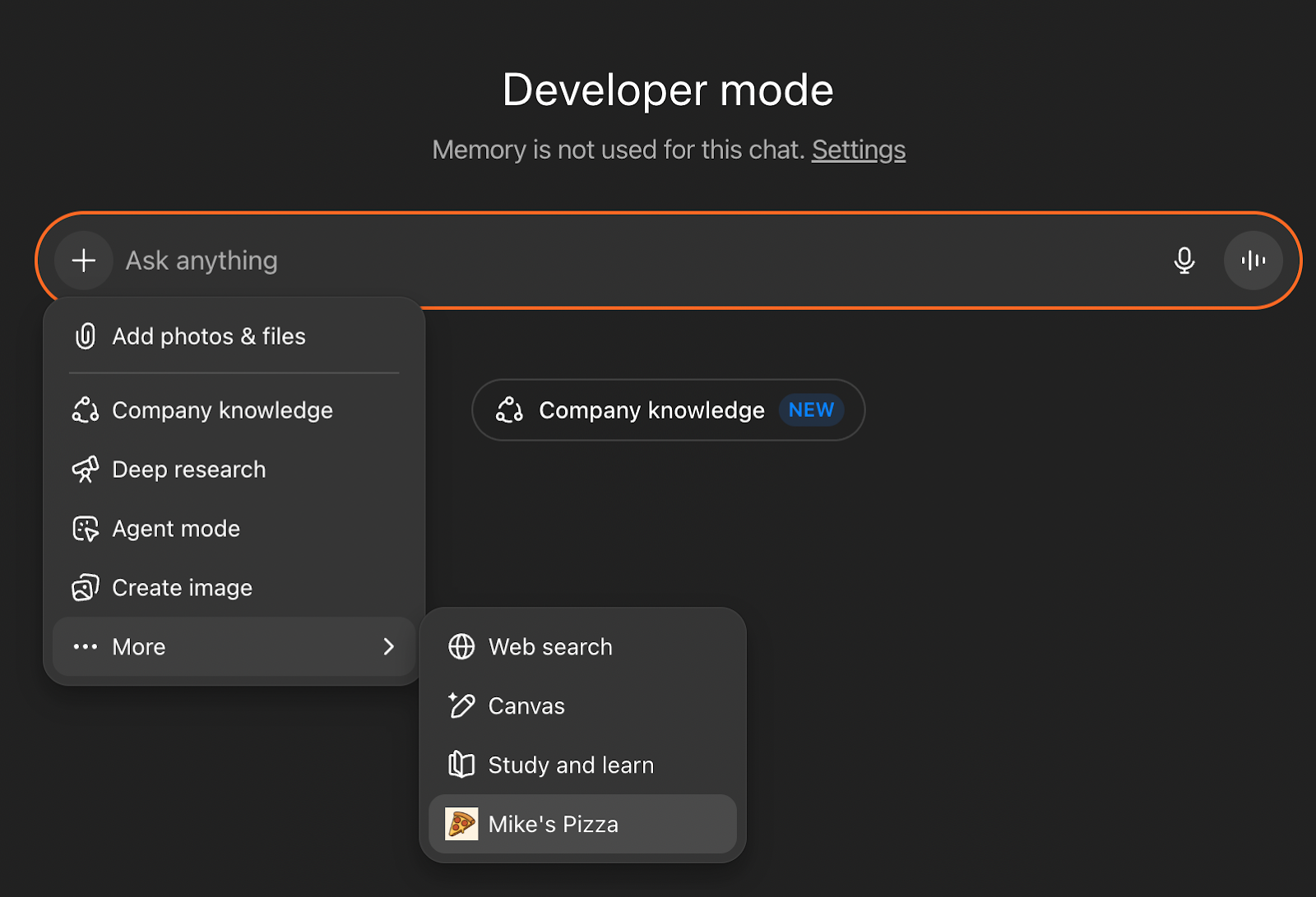

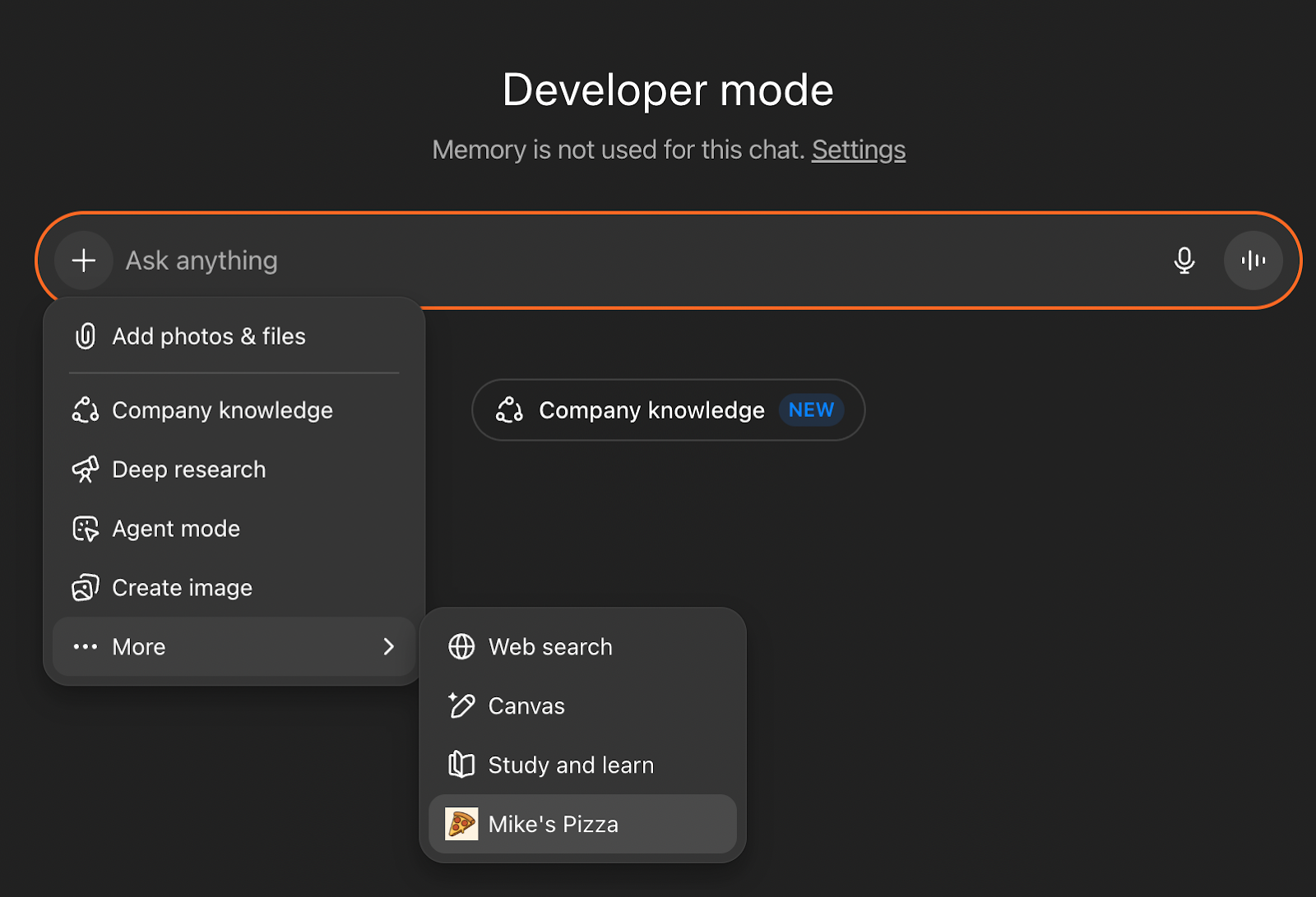

After the connector is set up, the app is ready for interaction. In a newly created chat, the ‘plus’ menu now contains a ‘More’ submenu where the Pizza app can be selected.

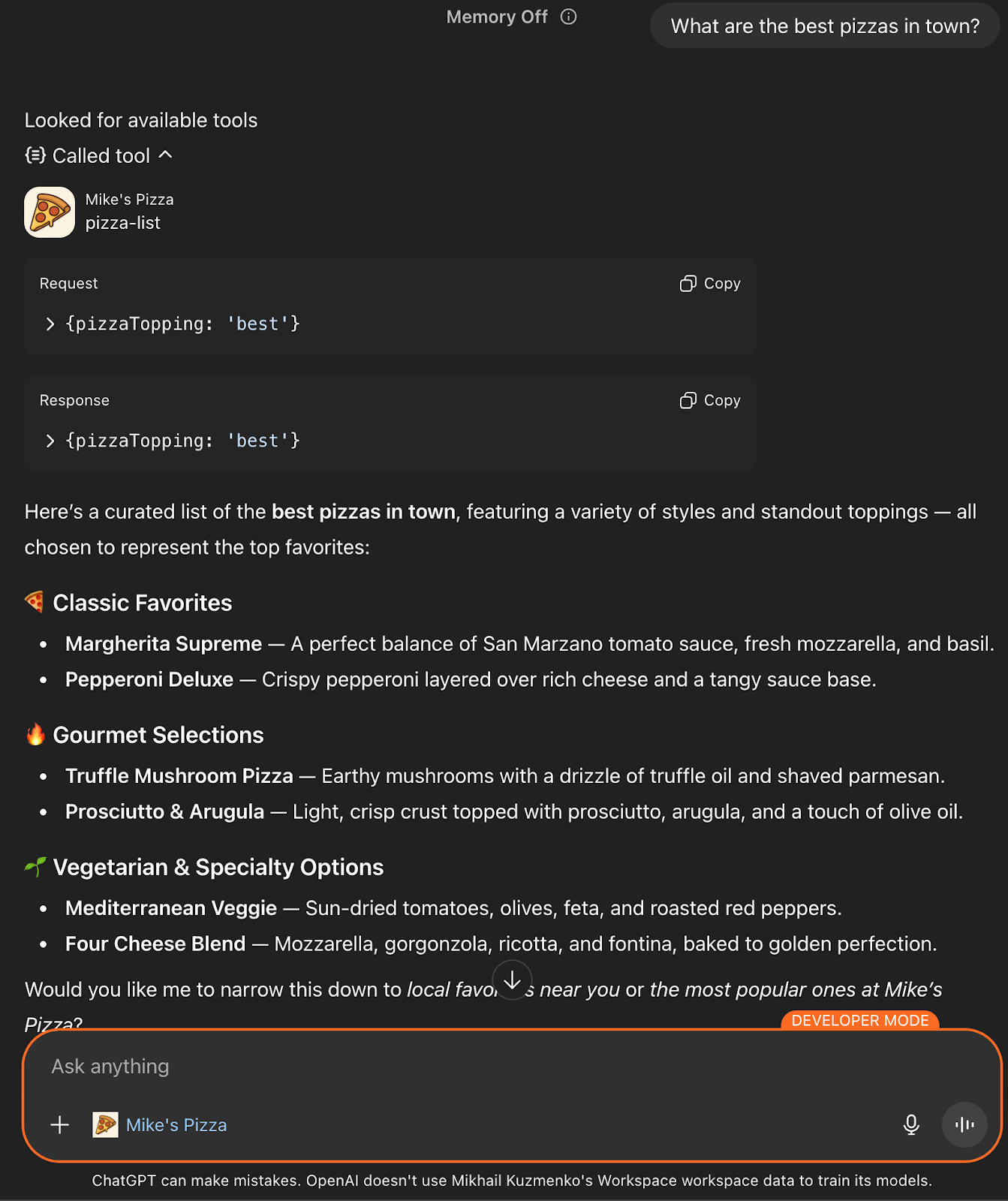

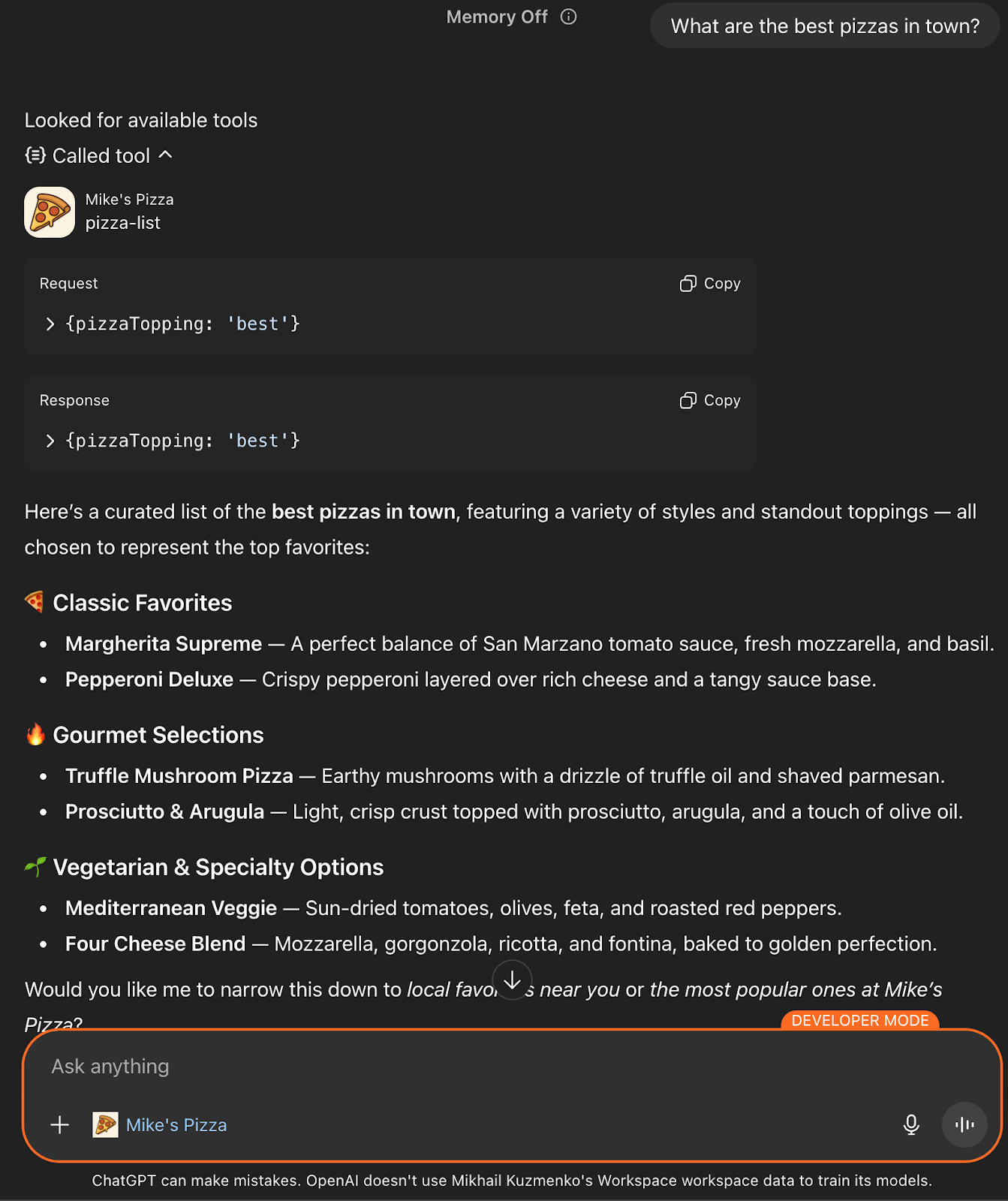

ChatGPT can now be asked questions like these:

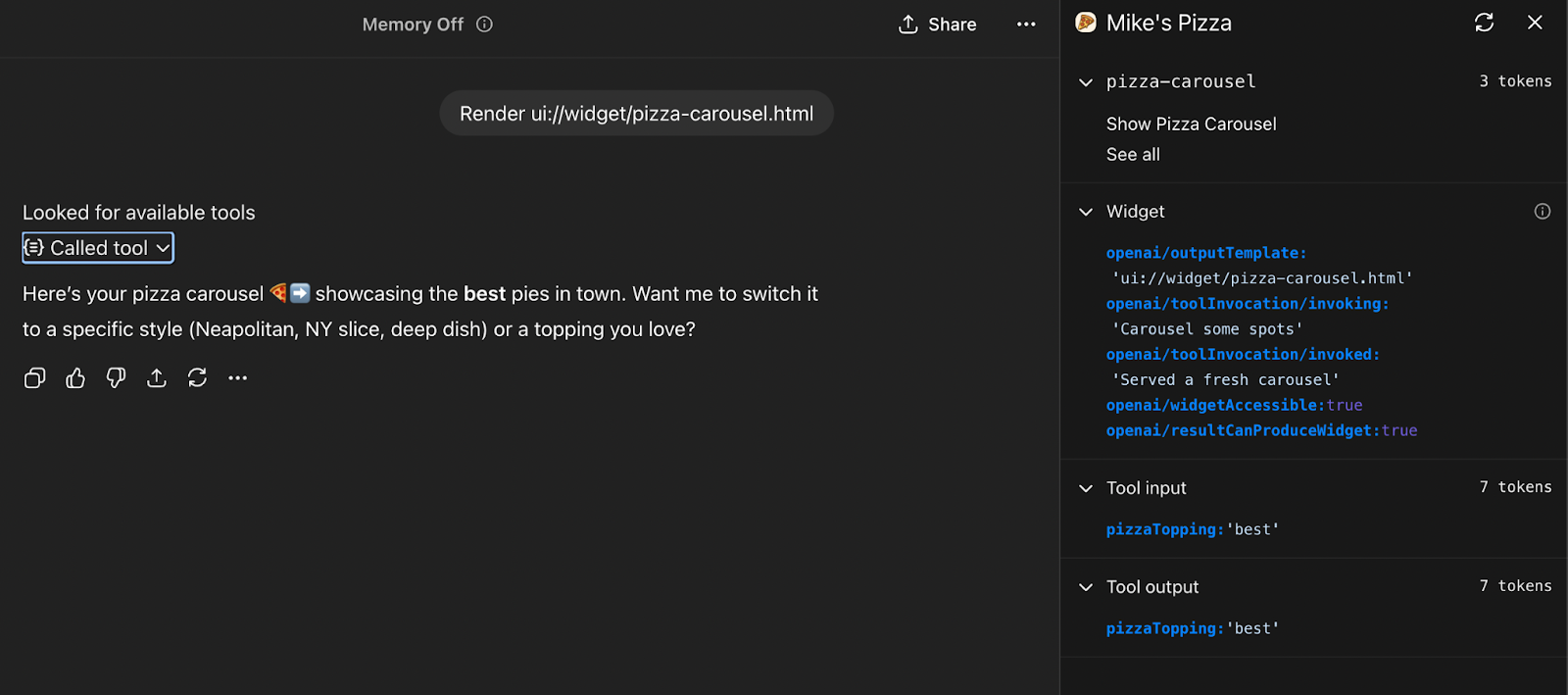

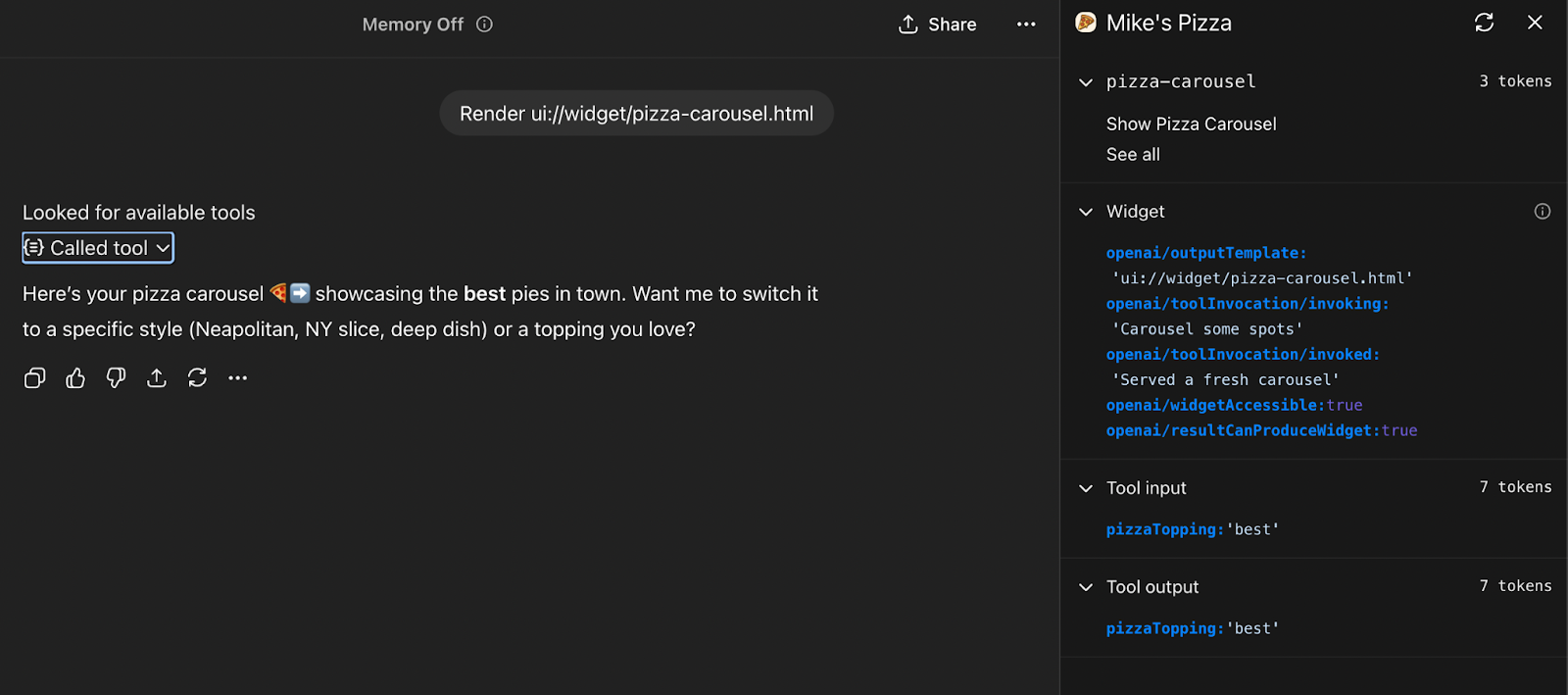

ChatGPT responded by invoking the appropriate tools from the Pizza app, rendering interactive widgets like carousels and maps directly within the chat interface.

Images weren’t shown in the chat because not all accounts have early access to testing widgets in their apps. However, when ChatGPT was asked directly to render the pizza carousel widget, it displayed the result, confirming that the logic works correctly.

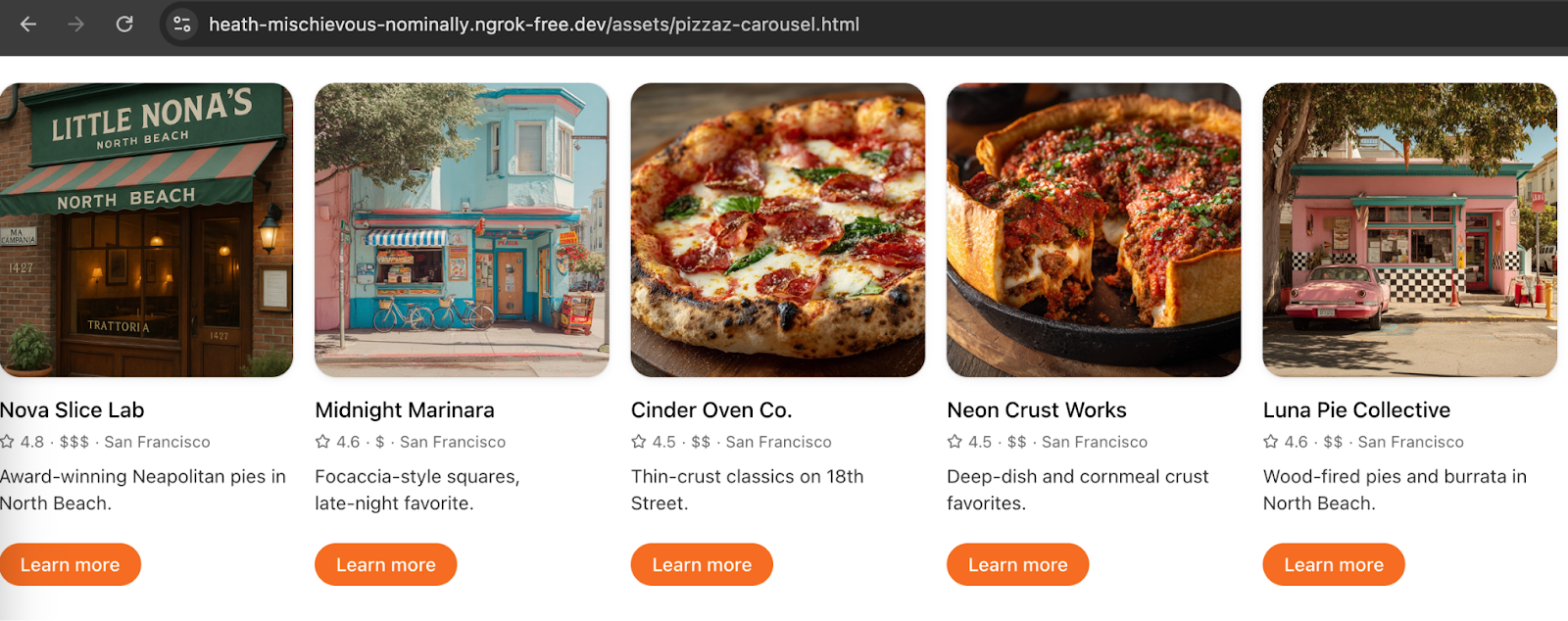

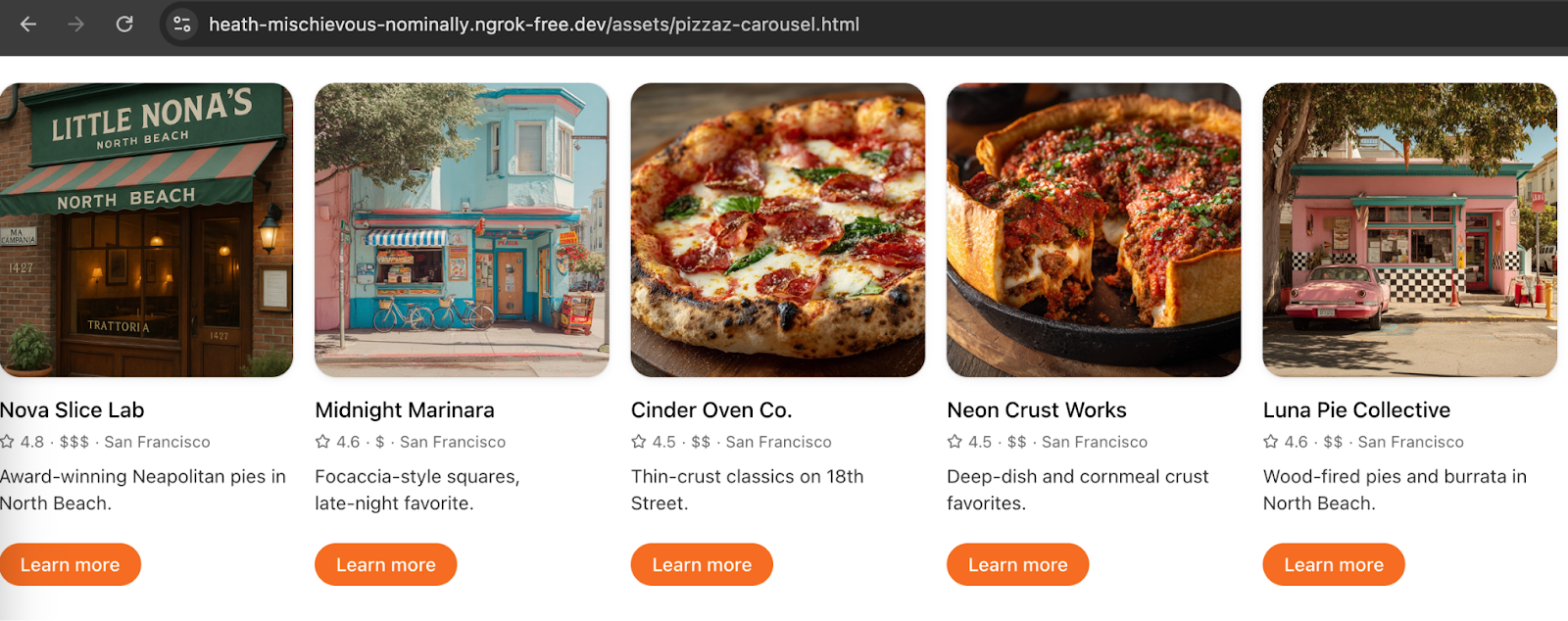

The pizza carousel widget’s HTML can also be opened in a web browser to check that everything renders correctly.
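The widget can be opened in a browser because it is just an HTML document that the server hands to ChatGPT as an MCP resource. The sketch below builds one entry of a `resources/read` result (`uri`, `mimeType`, `text`) for such a template. As far as we can tell from the Pizza example, the `ui://` URI scheme and the `text/html+skybridge` MIME type are what the Apps SDK uses. The asset base URL and the `-root` element id are hypothetical. You can save the `text` field to an `.html` file and open it locally, but the script only loads if its URL is reachable.

```typescript
// One entry of an MCP resources/read result's `contents` array.
interface ResourceContents {
  uri: string;
  mimeType: string;
  text: string;
}

// Build the HTML shell that loads a widget bundle produced by the build step.
function widgetResource(name: string, assetBaseUrl: string): ResourceContents {
  const html = [
    "<!doctype html>",
    `<div id="${name}-root"></div>`,
    `<link rel="stylesheet" href="${assetBaseUrl}/${name}.css">`,
    `<script type="module" src="${assetBaseUrl}/${name}.js"></script>`,
  ].join("\n");
  return {
    uri: `ui://widget/${name}.html`,
    // Assumption: MIME type the Apps SDK expects for widget templates.
    mimeType: "text/html+skybridge",
    text: html,
  };
}

// Hypothetical asset host; in local testing this would point at your served assets/.
const resource = widgetResource("pizza-carousel", "https://example.com/assets");
console.log(resource.uri);
console.log(resource.text);
```

Keeping the template as a thin shell means the heavy lifting stays in the bundled JavaScript and CSS, so a rebuild of the assets doesn't require changing the server.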

Our testing process provided valuable insights into the integration of custom apps within ChatGPT.

The Apps SDK proves that ChatGPT is rapidly evolving from a chatbot into a platform. Whether you are looking to drive conversions or simply provide better utility to your users, the technical pathway is now open.

If you saw the "Mike's Pizza" example and thought, "My product needs this," we are ready to execute.

NineTwoThree offers the complete development package for ChatGPT Apps.

Don't guess at the technology. Partner with the team that has already done the heavy lifting. Book a discovery call.

In our recent article, Apps in ChatGPT: Higher Conversions or Lost Users, we explored the strategic implications of OpenAI’s shift toward becoming a platform. We debated the business pros and cons, and whether owning the interface beats the massive distribution of the ChatGPT ecosystem.

But strategy is only half the battle. We wanted to see exactly what this looks like in practice.

So, in case you’ve decided that this is a solution for you, or you just want to know in more detail what it takes to get running, here is our technical investigation. Our engineering team took a deep dive into the new Apps SDK to see how third-party developers can embed interactive UI and logic directly inside ChatGPT conversations.

Here is what we found.

To understand what we are building, we first need to look at ChatGPT Apps from a user’s perspective. Here is how it normally goes: after entering the ChatGPT interface, a user might type something like: ‘Canva, create a social-media post for my product launch’ and the Canva UI appears inside your chat.

As we can see there is a little tag below the text input which shows that ChatGPT recognized an available app from the user's request. You can call the app by prefixing with the app name or ChatGPT can suggest the app during the conversation.

ChatGPT asked to fill the minimum needed information to be able to start a new product.

After being told to fill everything by itself and create something cool and totally random, it wanted to connect to the user's Canva account to be able to save the results.

After giving some permissions Canva is finally connected to the ChatGPT and can start producing example data.

Here Canva created a few examples for the post called ‘NovaX Smart Bottle’, a new smart water bottle (style: bold & energetic, palette: electric blue / black / white). It provided design input for the product:

As you could see, ChatGPT apps are web-based applications embedded within the ChatGPT interface. That is possible using the Apps SDK: developers provide a web UI and backend logic, while ChatGPT handles the conversational orchestration. This allows your app to respond to natural language commands and interact seamlessly with the user within chat.

But first let’s start with the things you need to know before we proceed with the step-by-step implementation journey.

The Model Context Protocol (MCP) server is the foundation of every Apps SDK integration. It exposes tools that the model can call, enforces authentication, and packages the structured data plus component HTML that the ChatGPT client renders inline. Developers can choose between two official SDKs:

These SDKs support frameworks like FastAPI (Python) and Express (TypeScript).

In Apps SDK, tools are the contract between your MCP server and the model. They describe what the connector can do, how to call it, and what data comes back. A well-defined tool includes:

UI components turn structured tool results into a human-friendly interface. Apps SDK components are typically React components that run inside an iframe, communicate with the host via the window.openai API, and render inline with the conversation. These components should be lightweight, responsive, and focused on a single task.

Developing a ChatGPT app begins with setting up a server that leverages one of the official SDKs — Python or TypeScript — typically using frameworks such as FastAPI or Express. This server, often referred to as the MCP server, acts as the bridge between ChatGPT and application logic. Within this environment, developers define tools, which are structured descriptors that outline the actions the app can perform, including the expected input and output formats. These tools form the contract between the app and the model, allowing ChatGPT to invoke the correct functionality based on user commands.

The frontend is implemented as a web-based UI that is embedded within ChatGPT’s chat interface. This UI is usually built with frameworks like React and communicates with the host through the window.openai API. The design of the UI should prioritize responsiveness and simplicity, ensuring a smooth experience for users on both desktop and mobile devices. Authentication mechanisms must also be integrated to verify user identity and maintain session context. Finally, persistent state management ensures that relevant user data and interactions can be stored securely, enabling multi-step or context-sensitive workflows.

Once the app is developed, deploying it requires careful attention to platform requirements and security standards:

Security and privacy are critical considerations when building ChatGPT apps:

Testing a ChatGPT app involves both functional validation and performance monitoring.

ChatGPT apps are inherently web-based and run inside the ChatGPT interface; it is not currently possible to embed fully native mobile code directly within the ChatGPT mobile app. This design has important implications for developers targeting iOS, Android, or cross-platform frameworks such as React Native.

To provide a seamless experience across mobile devices, developers must build a responsive web UI for their app. This UI should adapt to varying screen sizes and orientations, ensuring usability on smartphones and tablets. CSS frameworks, such as Tailwind or Bootstrap, or front-end frameworks like React, Vue, or Svelte, can help achieve a mobile-friendly design while keeping the interface lightweight enough to render smoothly inside ChatGPT.

For teams that already maintain a mobile app — such as a React Native application — ChatGPT apps can serve as a subset or extension of the full-feature app. Users may start with the ChatGPT-embedded web interface and, if additional functionality is required, transition seamlessly to the native app via deep linking. This approach allows developers to leverage the ChatGPT user base while still offering richer experiences in their native apps.

There are, however, some constraints and considerations to be aware of:

Overall, the recommended approach is to treat the ChatGPT app as a mobile-accessible mini-app that provides core functionality, while using deep links or integration with your native app to deliver more advanced features. This hybrid strategy maximizes reach within ChatGPT while preserving the richness and performance of your full mobile experience.

OpenAI is exploring monetization for apps in ChatGPT:

But there are some caveats. Policies are still developing. OpenAI will provide updated announcements later. So, built-in monetization flows may not be immediately available.

This chapter outlines the steps taken to test the Pizza app example from the OpenAI Apps SDK Examples repository. The process served as a proof of concept (PoC) to evaluate the integration of custom widgets and tools within ChatGPT.

The initial step involved cloning the official OpenAI Apps SDK Examples repository:

git clone https://github.com/openai/openai-apps-sdk-examples.git

cd openai-apps-sdk-examples

Subsequently, the necessary dependencies were installed using pnpm:

pnpm install

pre-commit install

These commands set up the required packages for both the frontend components and the backend server.

Before starting the server, it was essential to build the frontend assets. This was accomplished by running:

pnpm run buildThis command generated the necessary HTML, JavaScript, and CSS files within the assets/ directory, which are crucial for rendering the widgets in ChatGPT.

With the frontend assets in place, the next step was to start the backend server using:

pnpm startTo allow ChatGPT to communicate with the locally running server, it was necessary to expose the server to the internet. This was achieved using ngrok, a tool that creates a secure tunnel to localhost:

ngrok http 8000

Ngrok provided a public URL that forwarded requests to the local server:

https://heath-mischievous-nominally.ngrok-free.dev

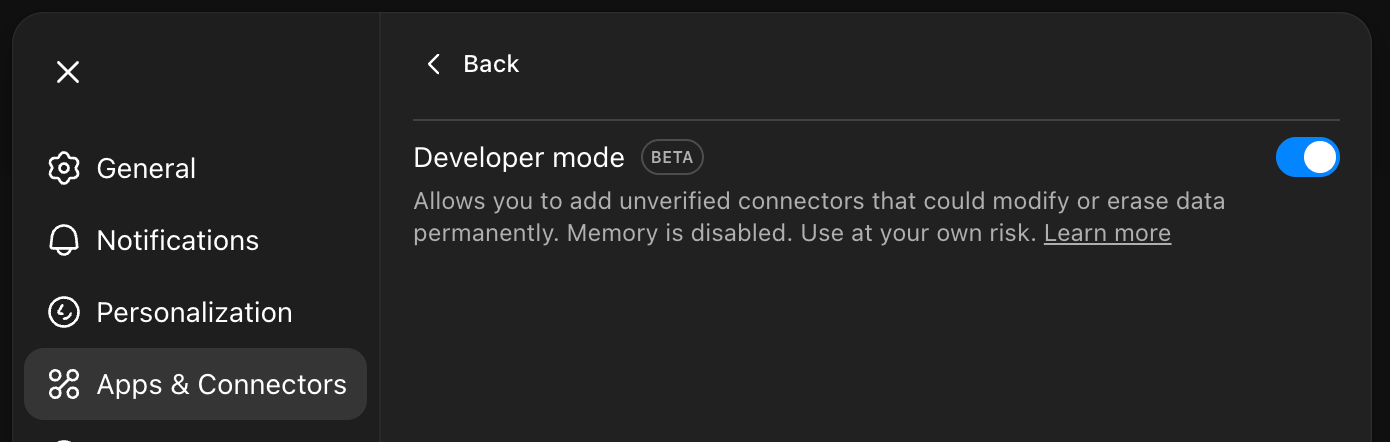

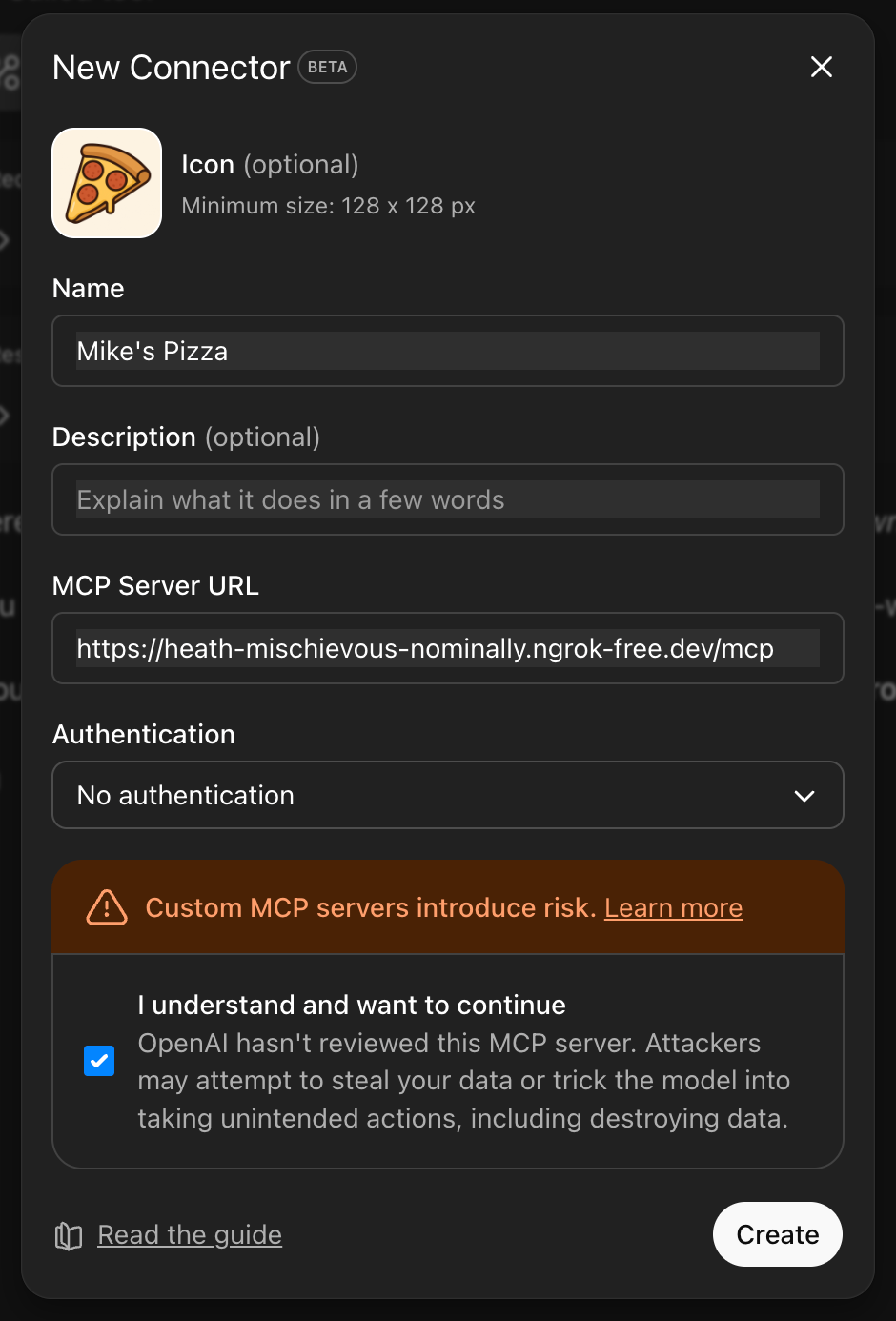

With the server accessible via a public URL, the next step was to configure ChatGPT to recognize the custom app:

After that the app is listed on enabled connectors list:

Also, in app details there is the list of actions this app supports.

After setting up the connector, the app is ready for interaction. In the nearly created chat in the ‘plus’ menu appears ‘More’ menu where Pizza app can be selected.

Now ChatGPT can be asked with the following questions:

ChatGPT responded by invoking the appropriate tools from the Pizza app, rendering interactive widgets like carousels and maps directly within the chat interface.

Images aren’t shown in the chat because not all accounts are granted early access to test widgets in their apps, but if ChatGPT is asked directly to render a pizza carousel widget, it will display the result confirming that the logic is working correctly.

Pizza carousel widget html can be opened in the web browser to make sure everything is working and rendering correctly.

Our testing process provided valuable insights into the integration of custom apps within ChatGPT:

The Apps SDK proves that ChatGPT is rapidly evolving from a chatbot into a platform. Whether you are looking to drive conversions or simply provide better utility to your users, the technical pathway is now open.

If you saw the "Mike's Pizza" example and thought, "My product needs this," we are ready to execute.

NineTwoThree offers the complete development package for ChatGPT Apps:

Don't guess at the technology. Partner with the team that has already done the heavy lifting. Book a discovery call.