According to the 2025 survey report by Cloudera, 96% of enterprise IT leaders stated that AI is at least somewhat integrated into their processes. At the same time, 95% of corporate generative AI pilots fail to deliver measurable ROI.

Both statistics hold at the same time, and the gap between them shows where most businesses go wrong. AI adoption is widespread. Successful AI implementation is rare.

This article covers what actually separates the two: how to decide whether an AI idea is worth pursuing, how to know if your organization is ready, and what a realistic AI implementation roadmap looks like in practice. If you want a more granular breakdown with scoring frameworks and decision trees, we've put all of that into our AI Implementation Guide, which you can download for free.

Before looking forward, it's worth understanding what the data shows about where businesses actually are.

What the 2025 research tells us:

What 2026 is expected to bring:

Reports from Deloitte, IBM, McKinsey, and Gartner converge in the same direction: more investment, more production deployments, more advanced multimodal capabilities, and a tightening regulatory environment.

The direction is clear. How to actually get there is the part most organizations are still figuring out.

The companies that consistently achieve positive ROI from AI share a few things in common, and very few of them are about the technology itself.

Every successful generative AI implementation starts with a specific, costly, and well-understood business problem. Before deciding on anything technical, the first question is whether AI can actually solve the problem at hand.

AI performs reliably on:

AI underperforms on tasks requiring nuanced judgment, ethical reasoning, or contextual creativity. If the task falls into the second category, the ROI case becomes much harder to make, regardless of how sophisticated the model is.

The second filter is measurability. An AI initiative without defined metrics is an initiative without accountability. McKinsey's research shows that companies with clear, quantified ROI targets are far more likely to move from pilot to production.
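To make "defined metrics" concrete, here is a minimal Python sketch of a success metric agreed before development starts: a baseline measured today, a committed target, and a check against production numbers. The `SuccessMetric` class and the ticket-handling values are hypothetical illustrations, not figures from the research cited above.

```python
from dataclasses import dataclass


@dataclass
class SuccessMetric:
    """A quantified target agreed before development starts (hypothetical structure)."""

    name: str
    baseline: float  # value measured before the AI system exists
    target: float  # value the initiative commits to reaching
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Compare a production measurement against the agreed target.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target

    def progress(self, observed: float) -> float:
        # Share of the baseline-to-target gap closed so far:
        # 0.0 means no change from baseline, 1.0 means the target is reached.
        gap = self.target - self.baseline
        if gap == 0:
            # Target equals baseline, so there is no gap left to close.
            return 1.0
        return (observed - self.baseline) / gap


# Illustrative values only: cut average ticket handling time from 12 to 8 minutes.
handling_time = SuccessMetric(
    "avg ticket handling time (min)", baseline=12.0, target=8.0, higher_is_better=False
)
print(handling_time.is_met(10.0))  # False
print(handling_time.progress(10.0))  # 0.5
```

Writing the baseline down before the project starts is the important part: without it, "progress" in production has nothing to be measured against.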

The most common reason pilots fail to become production systems has nothing to do with the AI model. It's that the surrounding engineering wasn't built to production standards.

Building a demo is straightforward. What separates it from a working product is everything else: handling unexpected inputs, integrating with existing systems, managing data pipelines, writing proper tests, and implementing security protocols.

At enterprise scale, where the average spend per use case is $1.3M (ISG, 2025), the investment demands proper architecture, not fast prototyping.
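To illustrate the difference between a demo and a product, the Python sketch below wraps a model call with a few checks a demo typically skips: rejecting empty input, capping oversized input, falling back gracefully when the model call fails, and catching empty replies. It also includes pytest-style tests for each case. `call_model` is a hypothetical stand-in for whatever model client you use, and the 4,000-character limit is an assumed placeholder.

```python
from typing import Callable

MAX_INPUT_CHARS = 4000  # assumed limit; derive the real value from your model's context budget
FALLBACK_MESSAGE = "The assistant is unavailable right now. Please try again later."


def answer_with_guardrails(question: str, call_model: Callable[[str], str]) -> str:
    """Wrap a model call with checks a demo usually skips (illustrative sketch)."""
    # Reject empty or non-text input instead of sending it to the model.
    if not isinstance(question, str) or not question.strip():
        return "Please enter a question."
    # Cap oversized input so it cannot overflow the model's context window.
    question = question.strip()[:MAX_INPUT_CHARS]
    try:
        reply = call_model(question)
    except Exception:
        # Production code would log and alert here; the user still gets a safe reply.
        return FALLBACK_MESSAGE
    # Treat an empty reply as a failure rather than a valid answer.
    if not reply or not reply.strip():
        return "No answer could be generated for this question."
    return reply.strip()


# pytest-style tests using stub models in place of a real client.
def test_empty_input_is_rejected():
    assert answer_with_guardrails("   ", lambda q: "unused") == "Please enter a question."


def test_model_failure_returns_fallback():
    def broken_model(q: str) -> str:
        raise TimeoutError("model timed out")

    assert answer_with_guardrails("Where is my order?", broken_model) == FALLBACK_MESSAGE


def test_long_input_is_truncated():
    received = []

    def recording_model(q: str) -> str:
        received.append(q)
        return "ok"

    assert answer_with_guardrails("x" * 10_000, recording_model) == "ok"
    assert len(received[0]) == MAX_INPUT_CHARS


def test_empty_reply_is_not_passed_through():
    expected = "No answer could be generated for this question."
    assert answer_with_guardrails("Hi?", lambda q: "  ") == expected
```

The tests are plain functions, so pytest can discover them automatically, or you can call them directly. In a real system, the `except` branch would also log the error and trigger an alert rather than only returning a fallback.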

AI implementation requires people who understand both the technology and the business context, and those two skill sets aren't always found in the same person.

Beyond technical roles, the part that gets consistently underestimated is change management. An AI product that employees don't adopt or that stakeholders don't trust won't generate returns regardless of how well it performs technically. This organizational layer is as important as the engineering layer.

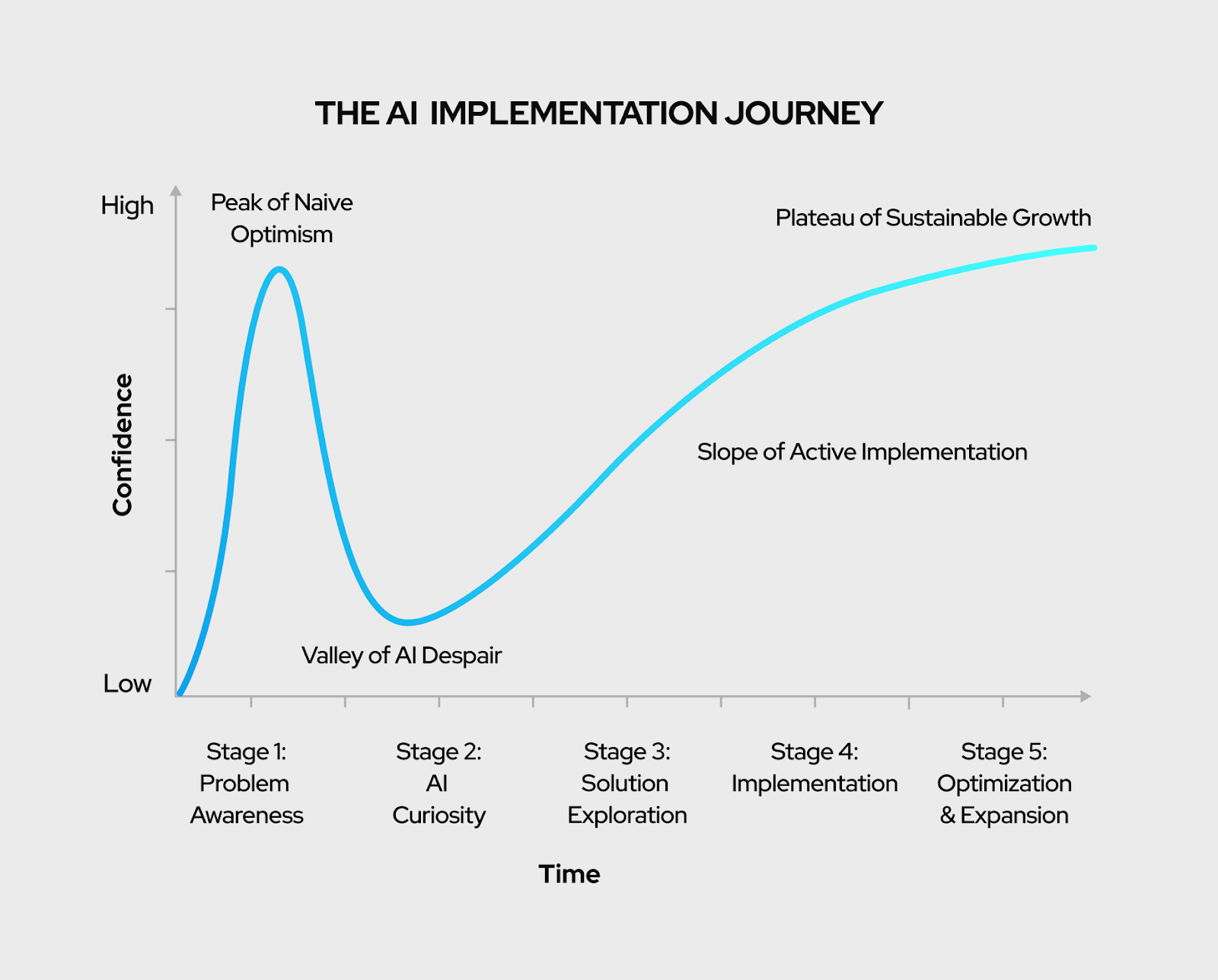

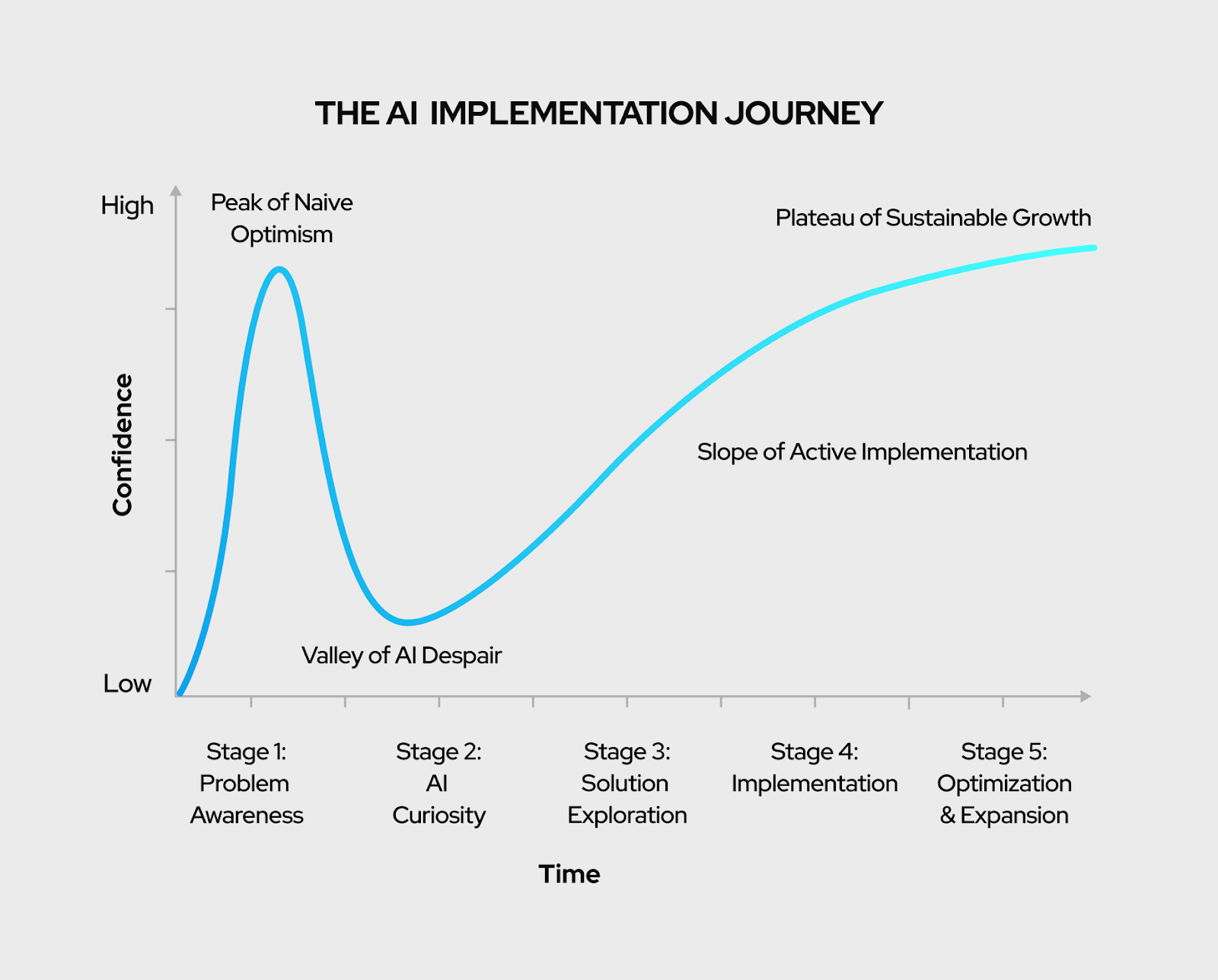

The failure pattern is predictable. Most organizations follow a similar arc:

This gap between a working demo and a working product is what's often called the Valley of AI Despair.

The diagnostic signs are recognizable:

The companies that avoid this pattern don't bypass these challenges. They plan for them at the start rather than discovering them mid-project.

One of the highest-value steps you can take before committing to a generative AI implementation is running a structured vetting process. Not every AI idea is worth building, and skipping this step is one of the primary reasons companies end up with sunk costs and no clear path forward.

A well-vetted AI initiative should pass five filters:

If you want to work through this systematically, our AI project vetting guide includes scoring criteria and decision logic for each filter, and helps you compare multiple ideas to identify where to focus first.

Even a well-vetted AI idea can fail if the organization building it isn't prepared. Readiness is a separate question from whether the idea is good. There are three areas to assess:

Do your current systems support the proposed solution? This includes data storage and access, computing resources, integration capabilities with existing software, and compliance with any relevant regulatory requirements. If your data lives in disconnected silos with no clean access layer, that's a foundation problem to solve before AI development begins.

Do you have people who can build, deploy, and maintain the system? If not, do you have a plan to acquire that expertise through hiring, training, or working with a specialist agency? This also covers who owns the system after it's built and whether that team can operate it long-term.

Is the organization prepared for the process changes that come with AI business integration? That means stakeholder support at the leadership level, user adoption plans, and willingness to adapt existing workflows. Resistance to change and poor stakeholder alignment are among the leading causes of AI implementation failures that have nothing to do with the technology itself.
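One way to turn these three areas into a working checklist is sketched below in Python. The questions paraphrase the paragraphs above. The pillar labels (`infrastructure`, `talent`, `organization`) and the `readiness_gaps` helper are our own hypothetical shorthand, not a scoring method from any formal assessment.

```python
# Questions paraphrased from the three readiness areas; pillar labels are hypothetical shorthand.
READINESS_QUESTIONS: dict[str, list[str]] = {
    "infrastructure": [
        "Can the required data be reached through a clean access layer, not disconnected silos?",
        "Are sufficient computing resources available?",
        "Can the solution integrate with the existing software it depends on?",
        "Does the solution comply with relevant regulatory requirements?",
    ],
    "talent": [
        "Are there people (or a hiring, training, or partner plan) to build, deploy, and maintain it?",
        "Is there a named owner who can operate the system long-term?",
    ],
    "organization": [
        "Does leadership actively support the initiative?",
        "Is there a user adoption plan?",
        "Are teams willing to adapt existing workflows?",
    ],
}


def readiness_gaps(answers: dict[str, list[bool]]) -> dict[str, list[str]]:
    """Return, per pillar, every question answered 'no' or left unanswered.

    An empty result means no gaps were flagged.
    """
    gaps: dict[str, list[str]] = {}
    for pillar, questions in READINESS_QUESTIONS.items():
        pillar_answers = answers.get(pillar, [])
        # A missing answer counts as a gap, so unasked questions cannot pass silently.
        open_items = [
            question
            for i, question in enumerate(questions)
            if i >= len(pillar_answers) or not pillar_answers[i]
        ]
        if open_items:
            gaps[pillar] = open_items
    return gaps


# Example: data still sits in silos, and nobody owns the system after launch.
example_answers = {
    "infrastructure": [False, True, True, True],
    "talent": [True, False],
    "organization": [True, True, True],
}
for pillar, open_items in readiness_gaps(example_answers).items():
    print(f"{pillar}: {len(open_items)} gap(s)")
```

Treating unanswered questions as gaps is a deliberate choice: a readiness check that passes by default tends to hide exactly the problems it is meant to surface.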

A structured readiness assessment before starting development can save months of wasted effort. Take NineTwoThree's AI readiness assessment to get a concrete picture of where your gaps are.

Once you've confirmed the idea is worth building and the organization is ready, the implementation process follows a defined sequence. Here is the high-level shape of it:

Define scope, align stakeholders, and validate requirements before committing resources. The output is a specific, buildable specification with agreed success metrics.

Get into the actual data to determine what's technically feasible and develop an architectural roadmap. This is the stage most organizations skip, and skipping it is why they end up stuck later.

Build the product with production-grade engineering practices: version control, QA, security protocols, edge case handling, and regular stakeholder feedback. The goal is a production-ready product, not a prototype.

Deploy, measure real KPIs against the original targets, and get users actively using the product. Adoption is half the work at this stage.

Train your internal team to operate, maintain, and evolve the system without depending on an external partner. Whether that means training existing staff or hiring new engineers, the transition plan should be part of the project from the start.
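The ordering matters as much as the steps themselves. The Python sketch below models each stage as a gate: a stage counts as complete only when its output exists, and the next stage to work on is always the first one whose output is missing. The phase names (`scope`, `discovery`, `build`, `launch`, `handoff`) are hypothetical labels for the stages described above, and the outputs paraphrase the article's wording.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Phase:
    name: str  # hypothetical label for the stage
    exit_output: str  # what must exist before the next stage starts


# Stages in the order described above; outputs paraphrase the article's wording.
PHASES = [
    Phase("scope", "buildable specification with agreed success metrics"),
    Phase("discovery", "architectural roadmap based on the actual data"),
    Phase("build", "production-ready product"),
    Phase("launch", "KPIs measured against the original targets"),
    Phase("handoff", "internal team able to operate, maintain, and evolve the system"),
]


def next_phase(completed_outputs: Set[str]) -> Optional[Phase]:
    """Return the first stage whose exit output is missing, or None when all are done."""
    for phase in PHASES:
        if phase.exit_output not in completed_outputs:
            return phase
    return None


# Jumping ahead to the build without discovery still points back at discovery.
done = {PHASES[0].exit_output, PHASES[2].exit_output}
upcoming = next_phase(done)
print(upcoming.name if upcoming else "all stages complete")  # discovery
```

Because the gate walks the stages in order, marking the build as done while discovery is still missing sends you back to discovery, which mirrors the point above about the stage most organizations skip.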

Most organizations building enterprise AI work with an external partner at some point. The quality of that partnership has an outsized effect on outcomes.

The options break down into five categories:

For organizations building custom AI products with a clear ROI target and defined timeline, a boutique product AI agency typically offers the best combination of speed, technical depth, and business focus.

NineTwoThree has completed over 150 projects for 80+ clients since 2012 across healthcare, logistics, fintech, manufacturing, and media. Our AI consulting services are built around the methodology described here. If you're evaluating partners, a discovery call is a good place to start.

This article gives you the framework. The AI Implementation Guide is a more detailed, structured reference you can work from, share with stakeholders, and return to throughout your project.

It brings together everything covered here (the vetting filters, the readiness pillars, the partner comparison, and the step-by-step process) in a single, detailed format built for practical implementation. It also includes direct access to the AI Business Idea Map, which walks you from "we think AI could help here" to a vetted, specific opportunity you can actually scope and build.

If you're serious about implementing AI in a way that reaches production and delivers measurable returns, the guide is a great starting point.
